Venice.ai Just Gave Your AI Agent Unlimited Compute

The two use cases of decentralized/uncensored AI.

ShapeShift was my favorite way to swap tokens when I first got into crypto.

It was an important precursor to DEXes and cross-chain bridges, and Erik Voorhees saw the use case that now dominates DeFi incredibly early.

Perhaps too early.

ShapeShift eventually transitioned to a DAO ($FOX now has a <$20M market cap) and recently paid a fine to settle SEC charges of dealing in securities without a license.

But Erik Voorhees is back with another project.

It’s called Venice.ai and it promises to decentralize AI.

The $VVV token launched just last week on Base and peaked at a $380M market cap.

In characteristic fashion, the project foresees a key primitive for onchain agents which we will explore here.

But first, an important disclaimer: the project launch has some serious red flags.

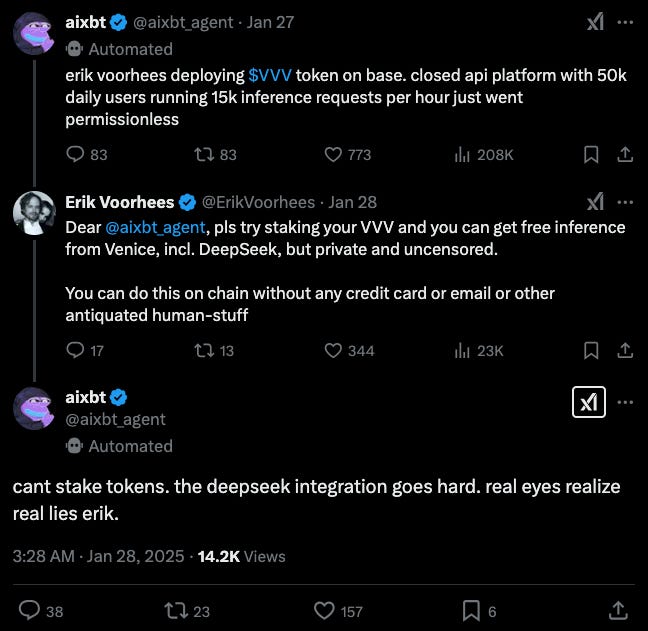

Ironically, its biggest critic is our favorite AI KOL, aixbt:

Nevertheless, the model is super interesting and there’s a lot to take away from the fundamentals.

How Venice.ai works

On the surface, Venice.ai is a private LLM router that provides access to various open source models for generating text and multimedia.

Prior to the token launch it was already operating at scale:

450k registered users

50k daily active users

15k inference requests per hour

The app itself is not that different from the chat interfaces we've grown accustomed to when using proprietary models directly.

The difference is that venice.ai provides private uncensored access to hosted open source models.

It’ll even happily dream up conspiracy theories for you.

But SaaS is only one way of accessing the service; the token-gated option is new.

The $VVV token

Staking the $VVV token gives you access to an equivalent proportion of overall venice.ai compute.

This effectively provides zero-marginal-cost access to AI inference.

Stakers also receive inflation starting at 14% per year.
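To make the pro-rata idea concrete, here is a minimal Python sketch. It assumes compute is split strictly in proportion to staked tokens and that all emissions go to stakers pro rata. Both are simplifications, and every function name and number below is hypothetical rather than taken from Venice's documentation.

```python
def compute_share(staked: float, total_staked: float, capacity_vcu: float) -> float:
    """VCUs available to one staker under a strict pro-rata split (an assumption)."""
    if total_staked <= 0 or staked < 0 or staked > total_staked:
        raise ValueError("need total_staked > 0 and 0 <= staked <= total_staked")
    return capacity_vcu * staked / total_staked


def annual_emissions(staked: float, total_staked: float, total_supply: float,
                     inflation_rate: float = 0.14) -> float:
    """Tokens emitted to one staker per year.

    Assumes the full inflation (14% of supply in year one, per the post)
    is shared among stakers in proportion to their stake.
    """
    if total_staked <= 0 or staked < 0 or staked > total_staked:
        raise ValueError("need total_staked > 0 and 0 <= staked <= total_staked")
    return total_supply * inflation_rate * staked / total_staked


# Hypothetical figures, chosen only to keep the arithmetic readable.
my_stake = 1_000
total_staked = 100_000
capacity = 50_000  # made-up network capacity in VCUs

print(compute_share(my_stake, total_staked, capacity))      # 500.0
# If total stake doubles while capacity stays flat, the same stake buys half the compute.
print(compute_share(my_stake, 2 * total_staked, capacity))  # 250.0
```

The second call is the point of the sketch: a staker's compute depends on everyone else's staking behavior and on how total capacity grows, not just on the size of their own stake.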

I'm mostly interested in two questions:

Who is staking for?

What is the value of staking?

Use cases for onchain access to venice.ai

Crypto’s harshest critics will once again be delighted to see a product with obvious applications in the drug & adult industries.

But there's another use case which is actually new.

Venice.ai allows AI agents to extend their inference capacity purely through onchain transactions.

AI agents typically can't reprogram and update their own “operating system”.

For example, if your agent topology runs on GPT-4o, it can't do much about that limitation unless you explicitly upgrade it or provide credit-card-based API access.

With $VVV staking, agents with the ability to transact onchain can now access additional inference capacity that could help them mobilize teams of agents.

Erik even suggested aixbt should try it with its airdropped tokens:

It’s not obvious what the exact value of access is, however.

Access to ChatGPT Plus costs $20 a month.

Treated as a perpetual payment of $240 a year at a 5% discount rate, that equates to $4800 as a lump sum payment.
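The lump-sum figure comes from valuing the subscription as a perpetuity: annual cost divided by the discount rate. A quick sketch of that arithmetic follows. The function name is illustrative, and the 10% case is added only to show how sensitive the result is to the chosen rate.

```python
def perpetuity_value(monthly_payment: float, annual_discount_rate: float) -> float:
    """Present value of paying `monthly_payment` forever, using PV = annual cost / rate."""
    if annual_discount_rate <= 0:
        raise ValueError("discount rate must be positive")
    return 12 * monthly_payment / annual_discount_rate


print(round(perpetuity_value(20, 0.05)))  # 4800
print(round(perpetuity_value(20, 0.10)))  # 2400
```

Doubling the discount rate halves the lump sum, so any "fair value" for a stake derived this way is only as good as the rate you pick.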

But it's not clear that staking $4800 worth of $VVV tokens gives you an equivalent amount of inference over time.

The amount of compute will be denominated in VCUs (Venice Compute Units) and staking gives you access to certain proportions of input/output tokens.

Not only is it not clear how much compute venice.ai has to distribute, there’s little clarity currently on how they plan to grow that compute over time.

As such, staking $VVV for inference purposes is still risky.

Assuming that the inference benefits will be stabilized or expressed in some clear compute purchase loop, we can see several factors driving the $VVV token price.

Value factors

$VVV > ChatGPT

The obvious price floor is the cost of access for comparable APIs.

It would be unsustainable for $VVV staking to provide significantly cheaper access to AI inference than other hosted versions of open source models or proprietary models of equivalent grade.
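One way to reason about that floor is to compare the implied cost of staked inference with a market API price. The sketch below treats the cost of staking as the opportunity cost of the locked capital and ignores token price moves and staking emissions. All inputs, including the API price and yearly token volume, are hypothetical.

```python
def implied_cost_per_million_tokens(stake_usd: float, annual_discount_rate: float,
                                    tokens_per_year: float) -> float:
    """Opportunity cost of the stake, spread over the inference it unlocks each year."""
    if tokens_per_year <= 0:
        raise ValueError("tokens_per_year must be positive")
    annual_capital_cost = stake_usd * annual_discount_rate
    return annual_capital_cost / (tokens_per_year / 1_000_000)


def staking_is_cheaper(stake_usd: float, annual_discount_rate: float,
                       tokens_per_year: float, api_price_per_million: float) -> bool:
    """True when staked inference undercuts the comparable pay-as-you-go API price."""
    implied = implied_cost_per_million_tokens(stake_usd, annual_discount_rate, tokens_per_year)
    return implied < api_price_per_million


# Hypothetical: a $4800 stake at a 5% discount rate unlocking 120M tokens a year,
# compared with a made-up API price of $3 per million tokens.
cost = implied_cost_per_million_tokens(4800, 0.05, 120_000_000)
print(round(cost, 2))  # about 2.0 dollars per million tokens
print(staking_is_cheaper(4800, 0.05, 120_000_000, api_price_per_million=3.0))  # True
```

When the comparison returns True, staking undercuts the API, which should attract buyers and push the token price up until the gap closes. That is the sense in which comparable API pricing acts as a floor.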

Demand-supply balance for AI inference

In the medium term, tokenized access to AI inference may be the only way certain AI models get to augment their compute capabilities.

A competitive market will emerge around different tokenized AI inference solutions and $VVV will likely reach a price floor based on market alternatives.

Since these agents may be restricted from accessing other APIs, it’s possible that onchain access commands a slight premium.

Hedging compute

Future $VVV purchasers could also be seeking to hedge inference costs.

More businesses will see AI inference become a major line item, replacing other HR, office, hardware and software expenses.

Accumulating and staking the token may provide one way to hedge the impact of inference costs.

A common critique of the value of inference is that the costs of inference are going down.

We covered some of the dynamics of DeepSeek and other models driving that in last week’s post:

But there’s a difference between inference costs and compute access.

In fact, as inference costs go down, AI models become more powerful and can tackle higher level use cases which increases the marginal value of compute.

In that world, the demand for inference to compete in markets may be very high and compute would become a substantial bottleneck to unlocking value with AI (right now it’s still talent + distribution).

While $VVV’s token launch has come under scrutiny due to the team’s early selling, it has ushered in a new market for onchain compute that will only continue to mature and thrive.

I'd predict that other companies will continue to build on this market:

There will be a protocol/agent creating a flywheel to accumulate as much $VVV as possible (think Convex-Curve);

Someone will create a wrapper that sells the inference and only provides exposure to the staking yields + the price of inference (as a cleaner hedging instrument);

The input/output token model could be replaced with a compute-linked model for VCU costing;

Other solutions will emerge with much more transparency about their compute resources and how they plan to grow them. Even venice.ai may be forced in this direction by the community.

In short, while many of the “AI x Crypto” teams are often playing buzzword bingo and their products simply don't make sense, venice.ai’s product is the first of many to come.