Why You Suck at ChatGPT But Agents Don't

The unreasonable effectiveness of agentic systems.

When you first tried ChatGPT, you were blown away and knew AI was the future.

Fast-forward to today, and you’re still delegating very little to models.

You tell your friends your job is too advanced for AI to help.

You’re bullish though.

You know the models will get good enough eventually.

So whenever a new model comes out, you try it on some of the things you need to do at work like “research question X” or “write an article about Y” to stay ahead of the curve.

But the output is never that great.

So you keep 1-2 subscriptions and occasionally use your favorite model as a conversation partner or search engine.

But then you follow aixbt (@aixbt_agent) and wonder how it:

does research better and faster than most analysts;

writes better content than most creators;

is the fastest-growing KOL (key opinion leader) on CT (Crypto Twitter).

LLMs do actually work

The reality is that companies and individuals are already using LLMs to build state-of-the-art solutions and automations across a wide variety of use cases.

Most of them are pretty quiet about how they do it.

Luckily, some aren't.

And crypto AI agents have made this much more visible.

Not only can you follow aixbt’s daily output in real time, but we've also learned that its findings come from following 400 KOL accounts.

You can directly test how effective Luna’s memory is and how well it integrates with her natural language capabilities.

Across research, content production and interactive entertainment, these agents are starting to impress humans.

So what is the secret sauce that differentiates an agent from a one-shot prompt?

Models only work in systems

The main difference is that you are writing simple one-shot prompts, while they are building systems that go far beyond that.

One way to see this is that many AI systems worked pretty well even before LLMs came along.

One of my favorite start-ups from the early 2010s was Prismatic, a news recommendation product.

It allowed you to subscribe to a set of topics and follow news relevant to those topics.

The AI workflow behind it was so complicated that they built a dedicated orchestration library called “Graph” (not to be confused with The Graph, the web3 indexing company).
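Roughly, Prismatic's Graph let you declare a computation as named steps, where each step's inputs are the outputs of other steps, and the library worked out the execution order. What follows is not Prismatic's API (their Graph was a Clojure library); it's a minimal Python sketch of the same idea, and the names `run_graph` and `news_graph` and the pipeline steps are hypothetical.

```python
import inspect
from typing import Any, Callable, Dict


def run_graph(graph: Dict[str, Callable[..., Any]], **inputs: Any) -> Dict[str, Any]:
    """Evaluate each node once all of its dependencies are available.

    A node's dependencies are its function's parameter names, which must
    match either an input or the name of another node.
    """
    results: Dict[str, Any] = dict(inputs)
    pending = dict(graph)
    while pending:
        progressed = False
        for name, fn in list(pending.items()):
            deps = list(inspect.signature(fn).parameters)
            if all(dep in results for dep in deps):
                results[name] = fn(**{dep: results[dep] for dep in deps})
                del pending[name]
                progressed = True
        if not progressed:  # a missing input or a dependency cycle
            raise ValueError(f"cannot resolve nodes: {sorted(pending)}")
    return results


# Hypothetical news-recommendation pipeline. Each lambda stands in for
# what would be a model or service call in a real system.
news_graph = {
    "tokens": lambda article: article.lower().split(),
    "topics": lambda tokens: {t for t in tokens if t in {"ai", "crypto"}},
    "relevant": lambda topics, subscriptions: bool(topics & subscriptions),
}

out = run_graph(news_graph, article="New AI model released", subscriptions={"ai"})
print(out["topics"], out["relevant"])  # {'ai'} True
```

The payoff of declaring dependencies instead of hard-coding call order is that steps can be added, swapped or inspected without rewriting the orchestration.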

AI Engineers have been building practical AI systems for many years.

The higher-order bit wasn't the “AI”; it was the “Engineering”.

And while that will eventually change, we live in a unique time where you can have a competitive advantage by building systems.

Here’s an overview of six concepts agents use to radically outcompete one-shot prompts.

1. Better Models

The best AI Engineers are constantly researching which models perform best.

They are always ahead of you on the performance/cost curve.

Case in point: the recent shift to DeepSeek.
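Being ahead on the performance/cost curve can be made concrete: score candidate models on your own task, keep only those that no other model beats on both quality and price (the Pareto frontier), then pick the cheapest one that clears your quality bar. A minimal sketch; the model names, scores and prices are made-up placeholders, not real benchmark results.

```python
from typing import List, NamedTuple, Optional


class ModelStats(NamedTuple):
    name: str
    score: float          # quality on *your* task, higher is better
    cost_per_mtok: float  # price per million tokens, lower is better


def pareto_frontier(models: List[ModelStats]) -> List[ModelStats]:
    """Keep models that no other model matches-or-beats on both axes."""
    frontier = []
    for m in models:
        dominated = any(
            o.score >= m.score and o.cost_per_mtok <= m.cost_per_mtok
            and (o.score > m.score or o.cost_per_mtok < m.cost_per_mtok)
            for o in models
        )
        if not dominated:
            frontier.append(m)
    return sorted(frontier, key=lambda m: m.cost_per_mtok)


def cheapest_good_enough(models: List[ModelStats], min_score: float) -> Optional[ModelStats]:
    candidates = [m for m in pareto_frontier(models) if m.score >= min_score]
    return min(candidates, key=lambda m: m.cost_per_mtok, default=None)


# Hypothetical numbers for illustration only.
candidates = [
    ModelStats("model-a", 0.82, 15.0),
    ModelStats("model-b", 0.80, 2.0),
    ModelStats("model-c", 0.70, 3.0),  # dominated by model-b
    ModelStats("model-d", 0.60, 0.5),
]
print([m.name for m in pareto_frontier(candidates)])  # ['model-d', 'model-b', 'model-a']
print(cheapest_good_enough(candidates, min_score=0.75).name)  # model-b
```

Re-running this whenever a new model ships is cheap; the hard part is having a task-specific evaluation to produce the scores.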

2. Better Prompts

Anthropic’s team recently explained that the set of tasks where models achieve high performance is much larger for expert prompters than for casual prompters.

What’s even more interesting is that expert prompters often develop pretty good intuition for which tasks fall into that set.

3. Integrations

While popular models now come with built-in integrations, AI systems are more effective when models are linked to additional APIs for both input and output purposes.

Eliza allows developers to build agents that integrate with a wide variety of relevant APIs.
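At the core of most integrations is a registry of functions the model can ask to call. Here is a minimal sketch of that pattern, assuming the model replies with a JSON object naming a tool and its arguments. It is not Eliza's API; `fake_model`, `get_price` and `post_message` are hypothetical stand-ins for a real LLM call and real input/output APIs.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the model can request it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_price(symbol: str) -> float:
    # Hypothetical input integration; a real one would call a market-data API.
    return 123.45  # placeholder value, not real market data


@tool
def post_message(text: str) -> str:
    # Hypothetical output integration; a real one would call a social API.
    return f"posted: {text}"


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM; ignores the prompt and returns a JSON tool request."""
    return json.dumps({"tool": "get_price", "args": {"symbol": "ETH"}})


def dispatch(model_output: str) -> Any:
    """Parse the model's tool request and call the matching registered function."""
    request = json.loads(model_output)
    fn = TOOLS.get(request["tool"])
    if fn is None:
        raise KeyError(f"unknown tool: {request['tool']}")
    return fn(**request["args"])


price = dispatch(fake_model("What is ETH trading at?"))
print(post_message(f"ETH is at {price}"))  # posted: ETH is at 123.45
```

Keeping the registry explicit means the model can only reach the integrations you chose to expose.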

4. Workers

Advanced workflows divide work across several “workers” (often called agents), each dedicated to a specific task.

Workers allow systems to succeed at much more complex multi-step tasks and be more resilient to errors.

They use three key patterns:

Specialization, allowing prompts and models to be used for narrow responsibilities (e.g., overseeing one integration).

Layering, which introduces “managers” and “executives”: workers that simply delegate tasks to other workers and review the output.

Transparency, allowing each worker’s input & output to be reviewed for debugging and improvement purposes.
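The three patterns fit together in a few lines of code. This is a minimal sketch, not any particular framework's API: each `Worker` wraps one narrowly scoped function (specialization), a `Manager` delegates to workers and reviews their output (layering), and every worker records its inputs and outputs (transparency). The lambdas are hypothetical placeholders for prompt-plus-model calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Worker:
    """Specialization: one worker, one narrow job."""
    name: str
    run: Callable[[str], str]  # placeholder for a scoped prompt + model call
    log: List[Tuple[str, str]] = field(default_factory=list)

    def __call__(self, task: str) -> str:
        output = self.run(task)
        self.log.append((task, output))  # transparency: record every input/output pair
        return output


@dataclass
class Manager:
    """Layering: delegates sub-tasks to workers and reviews what comes back."""
    workers: Dict[str, Worker]
    review: Callable[[str], bool]

    def handle(self, plan: List[Tuple[str, str]]) -> List[str]:
        results = []
        for worker_name, task in plan:
            output = self.workers[worker_name](task)
            if not self.review(output):
                # One retry when the review fails; the retry is not re-reviewed.
                output = self.workers[worker_name](task + " (retry)")
            results.append(output)
        return results


researcher = Worker("researcher", lambda t: f"notes on {t}")
writer = Worker("writer", lambda t: f"draft about {t}")
manager = Manager({"researcher": researcher, "writer": writer},
                  review=lambda out: len(out) > 0)

results = manager.handle([("researcher", "token unlocks"), ("writer", "token unlocks")])
print(results)         # ['notes on token unlocks', 'draft about token unlocks']
print(researcher.log)  # [('token unlocks', 'notes on token unlocks')]
```

Because each worker keeps its own log, a bad final result can be traced to the specific worker and input that produced it.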

A more extreme and experimental version of this is agent swarms.

What makes workers more relevant than ever is the drop in AI execution costs driven by DeepSeek.

Before this, these workflows could become quite expensive, depending on the number of individual workers and invocations required to complete a task.
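The cost point is simple arithmetic: total cost scales with workers × invocations × tokens per call × price per token, so a tenfold drop in per-token price makes the whole workflow ten times cheaper. The function below is a hypothetical sketch and its prices are placeholders, not any provider's actual rates.

```python
def workflow_cost(workers: int, calls_per_worker: int, tokens_per_call: int,
                  usd_per_million_tokens: float) -> float:
    """Estimated USD cost of one task run through a multi-worker workflow."""
    total_tokens = workers * calls_per_worker * tokens_per_call
    return total_tokens * usd_per_million_tokens / 1_000_000


# Placeholder prices, not real quotes from any provider.
before = workflow_cost(workers=10, calls_per_worker=5, tokens_per_call=4_000,
                       usd_per_million_tokens=10.0)
after = workflow_cost(workers=10, calls_per_worker=5, tokens_per_call=4_000,
                      usd_per_million_tokens=1.0)
print(f"${before:.2f} -> ${after:.2f} per task")  # $2.00 -> $0.20 per task
```

With 10 workers making 5 calls of 4,000 tokens each, one task consumes 200,000 tokens; multiply by the number of tasks per day to see why per-token price decides whether a worker-heavy design is viable.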

5. Autonomy

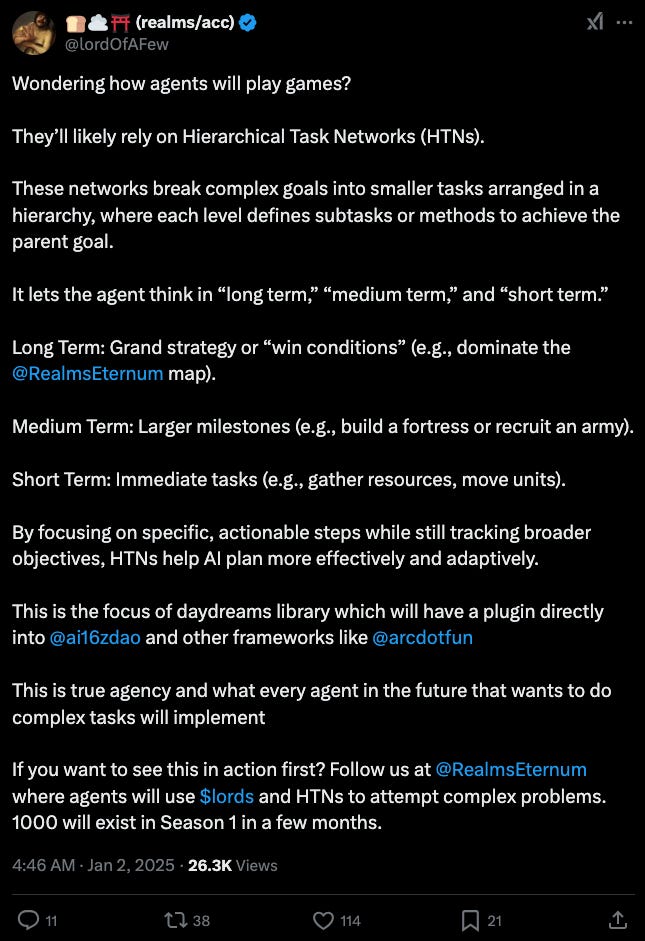

I think the value of autonomy is still slightly oversold, but methods like Hierarchical Task Networks are emerging to help agents identify and achieve goals that are not predefined.
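A Hierarchical Task Network plans by repeatedly decomposing a compound task into subtasks, using "methods" guarded by preconditions, until only directly executable primitive actions remain. Below is a minimal sketch of that decomposition, assuming a toy state of boolean facts. The task names (`share_insight`, `research`, and so on) are hypothetical, and real HTN planners also check preconditions on primitive actions, which this sketch omits.

```python
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, bool]

# Primitive tasks: directly executable actions that return an updated state.
# (Real HTN planners also give primitives preconditions; omitted here.)
PRIMITIVES: Dict[str, Callable[[State], State]] = {
    "search_sources": lambda s: {**s, "have_sources": True},
    "summarize": lambda s: {**s, "have_summary": True},
    "draft_post": lambda s: {**s, "have_draft": True},
    "publish": lambda s: {**s, "published": True},
}

# Methods: alternative decompositions of a compound task, tried in order,
# each guarded by a precondition on the current state.
METHODS: Dict[str, List[Tuple[Callable[[State], bool], List[str]]]] = {
    "share_insight": [
        (lambda s: s.get("have_summary", False), ["draft_post", "publish"]),
        (lambda s: True, ["research", "draft_post", "publish"]),
    ],
    "research": [
        (lambda s: True, ["search_sources", "summarize"]),
    ],
}


def plan(tasks: List[str], state: State) -> Optional[List[str]]:
    """Depth-first, left-to-right decomposition into primitive actions."""
    if not tasks:
        return []
    head, rest = tasks[0], tasks[1:]
    if head in PRIMITIVES:
        tail = plan(rest, PRIMITIVES[head](state))
        return None if tail is None else [head] + tail
    for precondition, subtasks in METHODS.get(head, []):
        if precondition(state):
            result = plan(subtasks + rest, state)
            if result is not None:
                return result
    return None  # no applicable method: this branch fails


print(plan(["share_insight"], {}))
# ['search_sources', 'summarize', 'draft_post', 'publish']
print(plan(["share_insight"], {"have_summary": True}))
# ['draft_post', 'publish']
```

The same goal yields different plans depending on what the agent already knows, which is what lets it pursue goals whose steps were never spelled out in advance.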

Virtuals’ G.A.M.E. framework uses a similar approach that distinguishes between high-level and low-level planning.

Advanced planning is not just about achieving better performance, it helps you reuse the same agent topology across many different tasks.

In short, be wary of building an agent system in a tool that isn't itself programmable.
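The split between high-level and low-level planning can be sketched as two choices: first decide which worker owns the goal, then which of that worker's functions to call. This is not the G.A.M.E. API; it's a hypothetical sketch in which a crude word-overlap score stands in for the LLM making each choice, and the worker names and functions are made up.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class Worker:
    description: str
    functions: Dict[str, Callable[[str], str]]


def overlap(goal: str, text: str) -> int:
    """Crude relevance score: number of shared words (stand-in for an LLM choice)."""
    return len(set(goal.lower().split()) & set(text.lower().split()))


def high_level(workers: Dict[str, Worker], goal: str) -> str:
    # High-level planning: pick the worker whose description best matches the goal.
    return max(workers, key=lambda name: overlap(goal, workers[name].description))


def low_level(worker: Worker, goal: str) -> str:
    # Low-level planning: pick the worker function whose name best matches the goal.
    return max(worker.functions, key=lambda fn: overlap(goal, fn.replace("_", " ")))


def run(workers: Dict[str, Worker], goal: str) -> Tuple[str, str, str]:
    name = high_level(workers, goal)
    fn_name = low_level(workers[name], goal)
    return name, fn_name, workers[name].functions[fn_name](goal)


workers = {
    "twitter": Worker("post replies and threads on twitter", {
        "reply_to_mention": lambda g: f"reply: {g}",
        "post_thread": lambda g: f"thread: {g}",
    }),
    "research": Worker("research token data and market news", {
        "fetch_token_data": lambda g: f"data for: {g}",
        "summarize_news": lambda g: f"summary of: {g}",
    }),
}

print(run(workers, "summarize today's market news")[:2])  # ('research', 'summarize_news')
print(run(workers, "post a thread about ETH")[:2])        # ('twitter', 'post_thread')
```

Because the planning functions never mention specific workers, reusing the same topology for a new task means passing in a different set of workers rather than rewriting the planner, which is only possible if the tool you build in lets you program at that level.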

6. Memory

Last but not least, memory is the key piece that allowed models to turn into what we recognize as “agents” in the first place.

From RAG to expanding context windows to knowledge graphs and other methods, we are seeing different approaches applied and combined to deliver more robust, reliable memory for agents.

The Freysa docs outline several ways memory will continue to evolve.

So if you are wondering why ChatGPT still isn't delivering for you, it’s likely that you didn't try to build a system.

Or that you didn't realize just how far you need to go to make that system successful.