Starknet: Ethereum's Dreadnought is Back

Can it seize the moment?

Disclaimer: This research report is supported by a grant from StarkWare. The views expressed are solely those of the authors and do not represent the views of StarkWare or their employers, clients or affiliates. This report is an exploration of the Starknet developer ecosystem and technology and does not constitute financial advice. Peteris and Auditless are $STRK airdrop recipients.

Thanks to dcbuilder, Fran Algaba, guiltygyoza, Itamar Lesuisse, Marcello Bardus, Matteo Georges, Mentor Reka, Moody Salem, Rohit Goyal, Sylve Chevet, Tarrence van As, the StarkWare team and the Starknet Foundation for providing valuable research input and/or reviewing versions of this article.

TL;DR
Introduction
  Starknet was all but forgotten
  Starknet is emerging from strategic hibernation
Why Starknet Exists (a.k.a. Why Should We Scale Ethereum)
  Ethereum is diamond blockspace
  Blockspace hardness matters for DeFi, the most important use case
  Ethereum is building digital international waters
  Ethereum has Bitcoin to thank for its philosophy
Part I: Starknet is a Monolithic Rollup for zkFi
  DeFi + validity scaled execution + provable computation = zkFi
  Starknet settles to the most decentralized base layer
  Scaling with validity proofs at the L2 and beyond
  Provable computation adding trust minimized expressivity to DeFi
  DeFi is the Vertical
  No one has scaled trustless DeFi yet
  A modular monolith
  Starknet: Ethereum’s Fat zkRollup for DeFi
  Interop is fundamentally a distraction from DeFi innovation
  Starknet doesn't need horizontal scaling
  The future of interop is already ZK
  Starknet’s Stack is the Scaling Endgame Embodied
  Validity rollups are the endgame, Starknet is leading the way from day 1
  Succinctness is more important than zero knowledge
  Succinctness scales vertically
  The cheapest rollups settle state-diffs
  ZKRs also have better trust assumptions than OR
  Proving Performance
  Starknet has made leaps in prover diversity
  Cairo has no match in prover performance
  Starknet is already scaling
  BlockSTM Parallelization
  Cairo native
  Block Packing (applicative recursion)
  State-diff compression and optimization
  Volition or “hybrid data availability”
Part II: Cairo is the Developer Stack for Provable Computing
  Why Ethereum Needs Another Programming Language
  Gas costs of current EVM blockchains lead to low-level optimizations
  Solidity makes it impossible to stack abstractions
  Solidity is the standard, not the EVM
  MultiVM
  Cairo as the Proving Paradigm Language
  Cluster computing over distributed data sets
  GPU-based machine learning over neural networks
  Cairo as Provable Rust
  Developers cannot prove the standard library.
  Rust wasn't built for provability, and that's a good thing.
  Ethereum's Best Smart Contract Language?
  Zero-cost abstractions
  Traits and composition over inheritance
  Caveats
Part III: Starknet's App Layer Reflects Its Innovative Architecture
  Why Starknet is the best place to innovate
  A rollup architecture that favors innovation
  Limited moats/flywheels of established competitors
  Don't forget Cairo
  Decoupling from the slow Ethereum EIP cycle
  Examples
  Autonomous Worlds
  Gaming and autonomous worlds pushing provable compute forward
  But will this actually play out in blockchains?
Conclusion
  Improving Ethereum as the “Least Aligned Ethereum L2”
  The role of L2s and the risks of losing Ethereum alignment

TL;DR

Ethereum rollups are currently developing in a local optimum: most progress has focused on stack modularization, there has been good progress on resolving the scaling bottlenecks of optimistic rollups and on retrofitting the EVM for validity rollups, and there has been very limited progress on “whole stack evolution”;

Starknet is one of the few projects developing an integrated upgrade candidate for the whole Ethereum product, with native end-to-end provability through its custom language Cairo, which enables a web2-grade developer experience. While developing an independent technology stack has its disadvantages, Starknet has achieved some of the lowest transaction fees of any L2 following the Dencun upgrade and is now at a critical point where its architecture's performance characteristics are a competitive advantage;

We believe that the natural path through which Starknet can express these advantages to developers is by embracing what we call the transition to “zkFi”: the rapid and inevitable embedding of validity proofs at every level of the DeFi stack. Along the way, Starknet will bring the scalability, developer experience and proving innovation benefits to crypto's most economically significant use case.

Introduction

Vitalik's famous Endgame post articulated a future where a multiverse of rollups at the limit combine to compete with monolithic “big block chains”. Since then, Ethereum scaling has largely progressed along a sacred, linear timeline.

The collective race to the Splurge has spurred a monoculture of colored rollups progressing through L2Beat's one-size-fits-all decentralization milestones and competing fiercely through developer support, on-ramps, and incentives.

Yet one community continued to defy the uniformity rat race altogether.

Following the Dencun upgrade, Starknet has emerged as having some of the cheapest transaction fees among Ethereum L2s and is starting to touch the inflection point on its scaling curve.

This wasn’t always the case.

Starknet was all but forgotten

StarkWare first earned its scaling stripes by launching StarkEx and achieving the highest trustless TPS of any L2 at the time. They partnered with pioneering appchain innovators like dYdX and Immutable and built high-volume exchanges for all major asset types. Their blueprint for Starknet had the potential to be Ethereum’s Dreadnought moment, a rollup design built entirely around the “big cannon” of STARK proofs. Some of the smartest builders flocked to form the early Starknet community.

But the act of generalizing StarkEx into Starknet, a general-purpose ZK rollup, wasn't nearly as smooth. The Cairo language had to be rewritten from scratch, and the sequencer suffered from outages and congestion. StarkEx's biggest partners soon lost patience and were quickly courted by other chains. EVM-based rollups kept their go-ethereum-based tech stacks simple and overshadowed Starknet in performance, adoption, modularity, and user experience.

The rollup's architecture was a mismatch with the cost function of the day. Underoptimized state-diffs combined with small transaction batches and a developing prover resulted in high data availability (DA), validation, and proving costs.
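The cost problem described above is largely one of amortization: a rollup pays a roughly fixed cost to prove and verify each batch on L1, plus a data-availability cost for what each transaction posts. The sketch below is a hypothetical cost model, not Starknet's actual fee formula; the function name and every number in it are illustrative assumptions.

```python
# Hypothetical per-transaction cost model for a validity rollup.
# Every constant here is an illustrative assumption, not a measured Starknet figure.


def cost_per_tx(batch_size: int, fixed_batch_cost: float, da_cost_per_tx: float) -> float:
    """Share a fixed per-batch cost (proving + L1 verification) across the batch,
    then add the data-availability cost each transaction pays for its posted data."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return fixed_batch_cost / batch_size + da_cost_per_tx


if __name__ == "__main__":
    FIXED_BATCH_COST = 50.0  # assumed cost per batch, arbitrary currency units
    DA_COST_PER_TX = 0.02    # assumed DA cost per transaction, same units
    for n in (10, 1_000, 100_000):
        per_tx = cost_per_tx(n, FIXED_BATCH_COST, DA_COST_PER_TX)
        print(f"batch of {n:>7} txs -> {per_tx:.4f} per tx")
```

Small batches leave the fixed term dominant, while large batches push the cost toward the per-transaction DA floor, which is why both larger batches and smaller state diffs matter.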

Starknet is emerging from strategic hibernation

But these technology decisions were positioned for a post-Dencun future. Ethereum’s Dreadnought is back. As we started to analyze the last couple of years in perceived hibernation, we uncovered a series of tactical decisions putting Starknet on the trajectory of becoming the most efficient, trustless general purpose provable chain we have seen so far and perhaps the best answer to our big block competitors.

Why Starknet Exists (a.k.a. Why Should We Scale Ethereum)

The idea of scaling Ethereum in a sound and incentive-compatible way isn't just a theoretical exercise.

One of the major themes of this cycle, and in fact, the bear market that preceded it, has been blockchain scaling. Ethereum was caught well out of position in the last bull market, with users facing high double- to triple-digit gas costs for simple swaps, transfers, and mints, pricing many people out of regularly transacting.

Ethereum’s answer to this has been “We’re solving it at layer 2” ever since the community united around Vitalik’s “rollup-centric roadmap,” and significant progress has been made. But as alternative layer 1s ship even more “scalable” designs in the short run, focusing on things like parallel transaction execution at the cost of higher node requirements, and as we learn, even within the Ethereum ecosystem, how much can be done with relaxed trust assumptions and offchain compute, it is worth revisiting why we are building on blockchains to begin with.

After all, if Solana post-Firedancer will have comparable performance to the Nasdaq itself, what is the point of something like Ethereum?

Why are we bothering to scale this slow base layer to begin with?

Ethereum is diamond blockspace

The answer lies in the concept of hardness, first articulated by Josh Stark in Atoms, Institutions, Blockchains. To quote Josh, beyond the literal “hardness” of the physical world and the “hardness” of human institutions, we have discovered a new source of hardness in blockchains:

Recently, we’ve invented a new way to create hardness: blockchains. Using an elegant combination of cryptography, networked software, and commoditized human incentives, we are able to create software and digital records that have a degree of permanence. If law, money, and government are the infrastructure of our civilization, then Atoms, Institutions, and Blockchains are some of the raw materials this infrastructure is built with. Just as an architect must carefully plan not only the design of a building, but the materials used to construct that design, so must we carefully consider the materials for our civilization’s infrastructure. But the civilization we are trying to build is stretching the limits of what those materials can do. It is becoming increasingly obvious that Atoms and Institutions alone cannot support the global digital civilization we strive towards. This is the problem that blockchains solve. They are a new source of hardness, with new strengths and weaknesses, which make them a suitable complement to address the limitations of Atoms and Institutions.

All of the complex scaling research and engineering, as well as all of the effort and concern put into keeping the MEV supply chain as decentralized as possible through efforts like PBS (Proposer-Builder Separation), inclusion lists, and PEPC (Protocol-Enforced Proposer Commitments), is aimed at keeping node requirements for both validators and verifiers (eventually light-client verifiers) of Ethereum as low as possible.

You should be able to verify the Ethereum chain from your smartphone soon, and with innovations in DVT (Distributed Validator Technology), the capital requirements for Ethereum staking should decrease to the point where home stakers with affordable hardware and internet connections can participate in securing the network.

Blockspace hardness matters for DeFi, the most important use case

At $100b in TVL, >$5b in protocol fees and >10M daily active users, DeFi is inarguably the use case for blockchains, besides money itself, that has the most product-market fit right now. We’re not only seeing the “crypto-native” DeFi space growing rapidly, but also more constructions that sit somewhere between DeFi and CeFi starting to move onchain, like centralized stablecoins, real-world assets, and institutional onchain securities trading.

As more of TradFi comes onchain, it will very likely begin to highlight the actual differences between implementations, how decentralized a system is, or to quote Josh again:

A much more efficient version of TradFi is possible with semi-self-custodial systems and semi-permissioned blockchain applications. This is likely the path that Solana and other high performance layer 1’s will find the most traction at their app layer in the long run.

But at a certain point, this raises the question of what we are trying to achieve with blockchains in general and DeFi in particular. We foresee some form of repricing of blockspace over the coming cycle, as centralization risk is always realized in a moment of crisis (and there will be more of those).

This repricing will be driven by precipitating events (like economic exploits of standalone PoS L1s or censorship attacks related to MEV) and by economic necessity. Economic necessity is the unique feature of permissionless public blockchains and smart contracts as opposed to centralized (even somewhat verifiable and self-custodial) solutions or state-backed payment platforms like India’s UPI (which, as Tarun Chitra has pointed out, is a rudimentary smart contract system in and of itself).

Ethereum is building digital international waters

This brings us back to Ethereum. Ethereum’s north star is building digital international waters, a maximally decentralized bulletin board capable of credibly supporting hyperstructures that can last generations.

Ethereum’s AllCoreDevs process and client diversity are unmatched. The decision to keep smart contract execution and DA together, rather than going the route of Celestia, is part of Ethereum’s own monolithic leadership at those layers of the stack. Scaling the execution of most things except rollup plumbing and whale transactions to L2 and beyond is where Ethereum’s modular strategy comes into play.

All of the major debates and design discussions within Ethereum, from PBS, to PEPC and inclusion lists, to how account abstraction is implemented, to client and stake diversity, are optimizing heavily for decentralization, trustlessness, censorship resistance, composability, and longevity (backward compatibility of the EVM, guarantees about hardness).

Ethereum and its diverse ecosystem of chains and applications make tradeoffs to maximize those properties, including making execution more complex and turning scaling into an entire design space of its own. But complexity usually leaks somewhere, and in Solana that complexity is instead internalized by the core devs at layer 1 and by the app devs who have to deal with a more complex DX.

Ethereum has Bitcoin to thank for its philosophy

At its core, Ethereum’s decentralization bias is inherited from its evolutionary ancestry as a Turing-complete Bitcoin: small validating-node hardware requirements (compute, storage, and bandwidth) that keep maximally decentralized and dispersed home staking accessible. Ethereum full nodes are about 5x cheaper to purchase and run than Solana nodes, and Ethereum accepts a much slower 12-second block time versus Solana’s continuous block building, delegating responsibility for end-user chains to layer 2 and above.

Solana can still rightly claim to be a decentralized, permissionless system on some spectrum of geographic validator distribution, token distribution, social layer, and increasing client diversity, and its proponents will point out that the ever-increasing pace of hardware development could allow it to handle a 100,000x or 100,000,000x increase in blockspace demand and to extend its fully synchronous state machine to billions of users.

The architecture of the modern web, however, suggests that asynchrony is the fundamental design pattern of web services and that synchrony is the exception, not the rule. Indeed, while atomic composability is really helpful for certain clusters of use cases (like DeFi), optimizing the entire system for that one property inarguably increases validator hardware requirements unless validity-proven, rollup-style architectures are used. The same technological forces that could push Solana’s node requirements down and its throughput up to match exponential demand would also accrue to efforts to scale a modular ecosystem like Ethereum, including more efficient prover hardware and the further miniaturization of compute and storage. The monolithic blockchains and Ethereum have largely just chosen different points on the same tradeoff curve.

This is the fundamental philosophical tradeoff, and while there are impediments to the full realization of the Ethereum home-staking, light-client cypherpunk future, it is a future worth fighting for.

Ethereum-rooted systems inherit some or most of Ethereum’s hardness properties. Like any other design space, a spectrum of ways to scale decentralized applications has arisen: from general purpose layer 2s and layer 3s to app-specific ‘rollapps’, to coprocessors and offchain computation and alt DA options like Validiums and Volitions. But all of these extra scaling abstractions, in the end, are only worth it if you get what really only Ethereum can offer you: diamond blockspace. If Bitcoin is digital gold, Ethereum blockspace is digital diamonds, the hardest thing in the universe.

Part I: Starknet is a Monolithic Rollup for zkFi

DeFi + validity scaled execution + provable computation = zkFi

One can think of the immediate opportunity sitting in front of Starknet as zkFi – the combination of true DeFi (emphasis on the “de” part - starting with the base layer it settles to), scaled through validity proofs, and made maximally expressive by general provable computation. As mentioned, DeFi is the only use case for crypto that has strong PMF, and the halo effect of DeFi liquidity will be a powerful driver of DeFi adjacent or non-DeFi use cases.

DeFi with Ethereum guarantees is going to be premium/hardcore DeFi. During the most euphoric parts of the bull run, we will continue to see projects compromising on decentralization in favor of raw performance because tradeoffs are hard, but ultimately decentralization is what distinguishes these protocols from CeFi/TradFi, a distinction that will become clearer and clearer as the next set of centralized crypto chains and protocols blow up when markets inevitably become unstable again.

Starknet’s opportunity is for its singular, scaled Ethereum validity rollup to foster a renaissance of DeFi innovation that can best take advantage of the efficient frontier of the decentralization and performance tradeoff curve. We already see the seeds of these next-gen DeFi protocols in the likes of Ekubo, an extensible AMM platform similar to Uniswap v4; AVNU, which is building a world-class swap aggregator with intent-centric trade execution and native-AA-driven features like paying gas in USDC; and Pragma, whose oracles use aggregated STARK proofs for correctness and are consumed by Starknet DeFi protocols like zkLend, Nostra and Nimbora.

There are several keys to Starknet’s “zkFi” ecosystem, enabled by Cairo and StarknetOS.

Starknet settles to the most decentralized base layer

Scaling DeFi is easy if you make compromises. And while Starknet’s stack does provide for such compromises (Apex Finance is built on StarkEx as a Validium for now, as an example), Starknet in rollup mode will increasingly be an attractive option for trust-minimized DeFi settlement to Ethereum over time.

Other high-performance L1s will host their own “DeFi” ecosystems, but as this cycle’s froth waxes and wanes and things inevitably go wrong with “DeFi,” the maximally decentralized version of DeFi will start to stand out more and more as an attractive proposition for the global, sovereign crypto capital base.

Indeed, Ethereum mainnet’s enormous TVL and DeFi volume, even today, is evidence of this – global crypto-native capital trusts Ethereum and ETH to secure their value over any other ecosystem. As rollups lose their training wheels and scale up, they scale this value proposition and bend the tradeoff curve towards “fast and cheap enough” and fully decentralized. The word “DeFi” should come to actually refer to things that are decentralized over time, and the base layer that secures these protocols will re-emerge as a determinative factor.

Scaling with validity proofs at the L2 and beyond

DeFi protocols that scale on Starknet will potentially do so in a variety of ways (from L2-only contracts to L2+coprocessor constructions and recursive layer 3’s), with ZK proofs as the core glue tying them together. DeFi apps that take advantage of validity-proven scaling will be built with different assumptions than both their Ethereum L1 gas-constrained predecessors and their monolithic high TPS L1 competitors and will find a happy medium in the form of Cairo programs on Starknet and beyond.

Provable computation adding trust minimized expressivity to DeFi

A scaling stack and execution environment that is validity-proof-native comes with advantages that go beyond just scaling and extend to validity-proven computation in general.

Ekubo AMM plugins can be designed to condition access on some proof of offchain data, like your largest Binance trade, your JPMorgan bank account balance, or your trading history across Starknet and even other chains. Starknet programs can trustlessly query the state of other blockchains via Herodotus storage proofs or provide cross-chain voting capabilities like SnapshotX.
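Storage proofs rest on a simple idea: a chain commits to its whole state with a single root hash, so a short inclusion proof can convince a verifier that a given value is part of that state without trusting whoever supplied it. The sketch below is a simplified illustration, not Herodotus's API: it assumes a plain binary SHA-256 Merkle tree, whereas real Ethereum storage proofs verify against a Keccak-256 Merkle-Patricia trie, and a real system must also establish that the root belongs to a trusted block header. The helper names `h` and `verify_inclusion` are hypothetical.

```python
import hashlib
from typing import List, Tuple

# Simplified binary Merkle tree over SHA-256 (an assumption for illustration;
# Ethereum's state actually lives in a Keccak-256 Merkle-Patricia trie).


def h(data: bytes) -> bytes:
    """Hash helper used for both leaves and internal nodes."""
    return hashlib.sha256(data).digest()


def verify_inclusion(leaf: bytes, proof: List[Tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the path from the leaf to the root.
    Each proof step is (sibling_hash, sibling_is_left)."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


if __name__ == "__main__":
    # Build a four-leaf tree by hand: root = H(H(a, b), H(c, d)).
    leaves = [h(x) for x in (b"a", b"b", b"c", b"d")]
    left = h(leaves[0] + leaves[1])
    right = h(leaves[2] + leaves[3])
    root = h(left + right)

    # Proof for b"c": sibling leaf d sits to the right, then the left subtree.
    proof_for_c = [(leaves[3], False), (left, True)]
    print(verify_inclusion(b"c", proof_for_c, root))  # True
    print(verify_inclusion(b"x", proof_for_c, root))  # False
```

The verifier only needs the root and a logarithmic number of sibling hashes, which is what makes proving another chain's state cheap enough to consume inside a smart contract.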

Starknet’s “autonomous worlds” fully onchain gaming sector is also showing what’s possible with arbitrary off-chain “prover servers,” which could enable the composition of a variety of web2 services whose state is proven and then used by smart contract applications on Starknet to provide a trust-minimized “bridge” between web2 and web3 DeFi, allowing for a potential explosion in the DeFi design space, all while using a single, purpose-built language for both smart contracts and arbitrary provable computation.

Being the L2 that scales Ethereum and raises the bar through winning DeFi and creating the next generation of enduring protocols and hyperstructures will be the base that gives Starknet’s technology stack the ability to travel beyond its own borders.

But it's not just scalability that makes zkFi possible on Starknet.

DeFi is the Vertical

There is never any one adoption curve in the diffusion of a technology as general and transformational as public blockchains and verifiable compute. And while DePIN, gaming, and decentralized social media are all incredibly exciting areas of growth for crypto, there is already a $100 billion industry using crypto every day: boring old DeFi.

In DeFi, crypto has gone beyond memetic stores of value to the early inklings of an alternative financial system, one based on self-custody, censorship resistance, credible neutrality and decentralization. As much as the early traction of DeFi has helped shed light on other opportunities for crypto beyond finance, DeFi itself is still far from mature, and the opportunity is far from realized.

DeFi, in its purest form, also stands most strongly up to the “why does this even need to be onchain to begin with?” test, insofar as you view the inherent value of permissionless DeFi protocols as not just a function of their ability to address today’s speculative, self-referential token economy, but also that the market structures themselves inherit these crypto-ethos guarantees which clearly distinguish their value from more centralized solutions.

Some decentralized social media may be possible without blockchains, for example, with things like DIDs and the Bluesky protocol, and asset tokenization could take off with legal and regulatory clarity and UX improvements over the status quo on fully centralized rails like CBDCs and private blockchains. But truly decentralized, permissionless finance, the original “hit” from crypto’s breakout DeFi summer, is the one thing we can comfortably say truly does need crypto.

No one has scaled trustless DeFi yet

Scaling DeFi in a way that preserves decentralization and minimizes trust has been a Herculean effort for the entire crypto industry. DeFi uniquely benefits from atomic composability and a shared execution environment, because splitting it up creates MEV and liquidity fragmentation as well as DevX and network-effect challenges. Ethereum’s roadmap and its rollup stack strategies (Starknet’s included) do provide for incredible async scale with Ethereum security, as well as potential solutions to the synchronicity problem (shared sequencing).

DeFi protocols also work best when they can easily interoperate. Efforts like chain abstraction, omnichain tokens, and intent-centric bridging (not to mention the AggLayer) promise to make this app-level DevX, network-effects and liquidity challenge more tractable over time, but in the short to medium run, direct colocation on the same execution environment is a boon to these DeFi villages.

On Ethereum, the closest we have to this is Arbitrum, with its impressive TVL and volume numbers rivaling nearly all other chains and L1s and a deep, post-token ecosystem that is beginning to develop the first inklings of “L2 lindy-ness” in DeFi, and Base has also seen a recent uptick after the 4844 upgrade (albeit driven to peaks by speculative trading).

We are getting closer to the point where the layer 2 ecosystem is mature, but in many ways, the industry, infrastructure build-out, and narrative around going beyond L2s themselves are putting the cart before the horse a bit.

4844 has certainly been a shot in the arm for L2 traction and narrative. But we know that induced demand will eat up most of the efficiencies from these MVP blobs, and that even with the full danksharding roadmap playing out, and with actual mass adoption as the ambition, L2s that inherit the full security of Ethereum will need to ruthlessly optimize to remain competitive with their more relaxed counterparts. Ethereum needs its own straight answer to the monolithic L1s: an L2 whose fees get close enough for DeFi use cases that inheriting Ethereum’s unique security and decentralization no longer effectively comes at the cost of UX.

A modular monolith

Appchains, in general, and rollapps, in particular, may very well be the endgame for certain applications, but the market may be prematurely rewarding projects for overcomplicating their mission by taking on the duties of both application and chain and losing some of the credible neutrality benefits of collaborating and composing with other apps on a more neutral playing field (say, a general purpose L2).

It will be interesting to see what kind of apps Hyperliquid and Aevo can attract to their hybrid appchain with third-party app models. Instead of tweaking consensus and execution environments infinitely to add some extra edge to their rollapp, app devs should be focused on building applications that are sticky and innovative and only reach for a chain when they absolutely need to from a scalability or decentralization perspective.

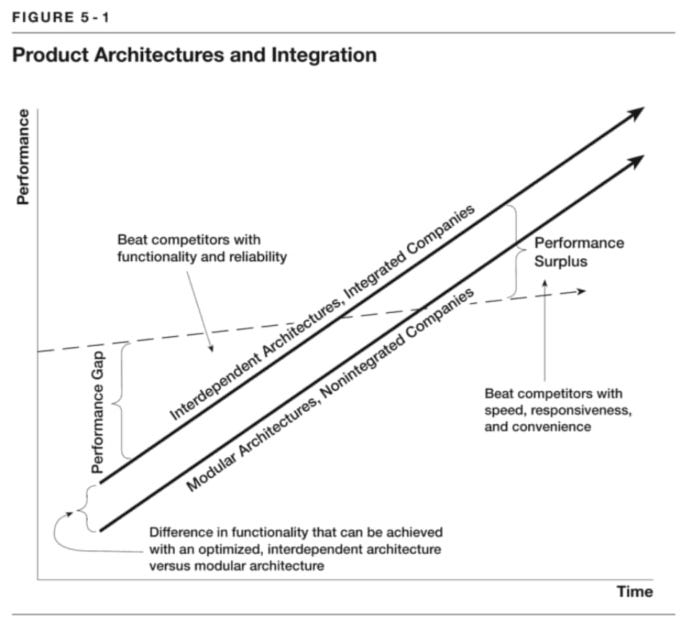

Modular vs. monolithic is kind of a false debate. Early on in the development of a particular component/market in technology, the “integrated” solution always wins first, and modularity comes later. Ethereum’s economic security and potential for a modular interface between the settlement layer and the execution layer was a key insight, but the metaphor has been overextended by teams who are essentially over-optimizing the modularity side before layer 2 itself is competitive with the top-tier integrated L1s.

Application-level innovation is what crypto in general and DeFi in particular need to take the next leap beyond yield ponzis and Uni-v2 forks. Teams trying to be everything – killer app and layer 2/layer 1 custom to their needs – are facing the same uphill battle for traction as pure app devs, with twice as many things to worry about.

While the full scaling design space can and should be explored as crypto attempts to plumb every possible use-case niche, the time is right for Ethereum’s own monolithic answer to Solana and Sui: a hyperoptimized layer 2 ecosystem that takes full advantage of Ethereum’s security and decentralization affordances while pushing scale and expressivity to the absolute limit. With Starknet and Cairo, we have exactly that.

Starknet: Ethereum’s Fat zkRollup for DeFi

Provable exogenous compute is the fundamental building block of Ethereum’s scaling roadmap. Fraud proofs and posting calldata were the first iterations of this, but there is almost no disagreement that state-diff based, validity-proven rollups will ultimately trounce all other constructions in the not-too-distant future.
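The advantage of state diffs can be made concrete. A rollup that posts transaction data must publish every transaction so that anyone can re-execute it (which optimistic rollups need for fraud proofs), while a validity rollup only has to publish the net change to state, because the proof already attests that the transition was computed correctly. The sketch below counts posted entries under a deliberately crude, hypothetical model in which each transaction is reduced to its storage writes; real transaction data also carries signatures and calldata, so the model understates the gap. The function names are illustrative.

```python
from typing import Dict, List, Tuple

# Hypothetical model: a transaction is just the list of storage writes it performs.
Write = Tuple[str, int]  # (storage_key, new_value)


def transaction_data_entries(txs: List[List[Write]]) -> int:
    """Transaction-data posting: every write of every transaction is published."""
    return sum(len(tx) for tx in txs)


def state_diff(txs: List[List[Write]], pre_state: Dict[str, int]) -> Dict[str, int]:
    """State-diff posting: only each touched slot's final value is published,
    and slots that end up back at their pre-state value are omitted."""
    final: Dict[str, int] = {}
    for tx in txs:
        for key, value in tx:
            final[key] = value  # a later write overwrites an earlier one
    return {key: value for key, value in final.items() if pre_state.get(key) != value}


if __name__ == "__main__":
    # 100 swaps against one AMM pool, each updating the same two reserve slots.
    pre = {"pool.reserve0": 1_000, "pool.reserve1": 1_000}
    swaps = [[("pool.reserve0", 1_000 + i), ("pool.reserve1", 1_000 - i)] for i in range(1, 101)]
    print(transaction_data_entries(swaps))  # 200
    print(state_diff(swaps, pre))           # {'pool.reserve0': 1100, 'pool.reserve1': 900}
```

The more activity concentrates on the same state, as it does in busy DeFi pools, the larger the compression, which is the intuition behind state-diff-based validity rollups winning on cost.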

Interop is fundamentally a distraction from DeFi innovation

Cross-L2 interop continues to make strides, but there are challenges, and since we are still trying to find app-level PMF for scaled versions of DeFi, all of the work and focus on it, while helpful, is often a sideways move into theoryland instead of enabling concrete use cases.

With the exception of cross-chain aggregation of things like DEX swaps, yield farming opportunities, and the like (a problem for which the fragmentation and increase of layers itself create the need for a solution), cross-chain interop is more about allowing users to traverse between competing DeFi ecosystems and improving throughput than having some kind of truly “chainless” experience.

We end up with competing interop implementations from the various chain and shared sequencing projects, resulting essentially in multiple “zones of composability” within various chain ecosystems, with bridges and shared sequencer projects each looking to be “just one more standard, bro.” In many ways, this simply recreates the game at a higher level of abstraction: the interop problem then just shifts to interop between interop zones.

These solutions also come with their own compromises, like homogeneity of implementation in the case of OP’s Superchain, and there are significant challenges to architecting robust shared sequencing due to the fact that chains that are atomically composable with each other usually need to lock state on each other’s chains, leading to more economic and game theoretic complexity and potentially capital inefficiency.

To be clear, this is a messy problem and one worth solving. This async and, in some cases, synchronous composability future is absolutely critical for scaling blockchains, and we are not FUDing the concept of bridging; in fact, technology like ZK proofs will help make even crossings between zones of composability as trust-minimized as possible. But for building a robust DeFi ecosystem, atomic composability and an unfragmented user experience (the Solana promise) are simply a better overall experience for now.

Starknet doesn't need horizontal scaling

The great part about Starknet is that it can scale itself to meet the market need for a “monolithic” home chain for DeFi with Ethereum guarantees. When Starknet protocols need additional scale, there are a number of extensions to the L2 itself that can be built, like L3s, coprocessors, and Volition architectures.

Investment in interop is important but isn’t a magic bullet for making valuable applications. Yes, bridging UX sucks today, but intents, storage proofs, and other innovations and abstractions can improve it, and many chain ecosystem players like Polygon are trying to create these zones of interop (including atomic interop). Still, interop largely feels like a problem that is going to solve itself, and one that is less important to the success of crypto and Ethereum than user- and app-level PMF.

There are many ways to scale beyond L2 and many ways to solve interop. But for chains, at least the L2s themselves, to enshrine a particular view of interop while research is still ongoing around topics like based shared sequencing, light-client-based bridging, and intents seems like a premature optimization.

While these competing L2s try to solve every possible blockchain vertical, offer a stack for building your own blockchain, and define the topology of interchain communication, we think that Starknet has the opportunity to simply focus on scaling DeFi on one chain, settling on Ethereum. And besides…

The future of interop is already ZK

Of course, bridging is important, and Starknet is well positioned both to be an easy place to bridge to (increasingly so with the advent of intent-centric bridges) and, together with the Cairo stack, to provide integral building blocks for cross-chain interop without Starknet even particularly prioritizing it.

The wider industry has been coming around to the inevitability of ZK in interop for a while now, and we are starting to see the fruits of “ZK bridging” finally being born, with things like the Celestia <> Ethereum ZK bridge operated by Succinct Labs, Polytope’s Hyperbridge, and Not Another Bridge’s intent-based bridge solution which replaces cryptoeconomic slashing with validity proofs to get the best of both worlds.

In addition to having arguably better fundamental trust assumptions than optimistic rollups (no 1-of-N honest-actor assumption), validity rollups do not require an optimistic challenge window, which lets them offer faster trustless finality. In intent-centric bridges, finality risk is no longer technically borne by end users but by solvers. Solvers need to price in less finality risk than when net-settling intents through optimistic constructions, and that saving can be returned to users as better quotes.

Starknet’s bridging ecosystem is early, but all of the pieces are there, including the potential for proof aggregation à la Polygon AggLayer. Here, though, it is driven much more by the community than by a first-party strategy from the StarkWare team itself. The community is already bringing Starknet and Cairo cross-chain and pushing the ZK tech to its logical conclusions.

Herodotus, for example, is offering its coprocessor services to prove any chain state on any other chain, with the “backend” proof infrastructure implemented in Cairo and the main Herodotus prover being built on Starknet. SnapshotX, which leverages the Herodotus coprocessor, is an example of an application-level innovation in cross-chain communication rooted on Starknet, allowing users to vote for governance proposals on any chain, with settlement flowing through Starknet back to Ethereum regardless of where the original vote was signed.

In the end, ZK-rollups have the best interop properties. Starknet is well positioned to tackle interop when it becomes important, but for now nothing matters more than apps on the L2 itself.

Starknet’s Stack is the Scaling Endgame Embodied

So, back to basics: How do we scale L2 and optimize it for DeFi so that Ethereum’s diamond blockspace can be best utilized?

It’s pretty clear to most people that the answer is validity rollups, particularly state-diff based validity rollups, and that ZK is the endgame. We think Starknet’s challenging approach of decoupling from the EVM while aligning with Ethereum will yield an extremely compelling long-term tradeoff curve that sets it apart. At the same time, it is bringing several short- to medium-term scaling improvements to market that will make it competitive in DeFi.

But first, let’s take a look at the different dimensions of what we mean by scaling Ethereum with rollups:

Cost per transaction for some average user operation, like a generic transfer transaction or a swap;

Speed of transaction UX from user POV, or the end-to-end experience of signing a transaction, receiving feedback from the UI and backend, waiting for confirmation and finality onchain;

Chain TPS capacity without outages, or the amount of concurrent transactions-per-second the chain is actually able to process;

Expressivity/functionality may have multiple dimensions, but “scaling Ethereum” also means plugging holes in what is possible on layer 1. EVM rollups are experimenting with “RIPs” (Rollup Improvement Proposals) to add EVM functionality, like new curve precompiles, ahead of mainnet. Rollups like Starknet or Fuel make more dramatic changes by going beyond the EVM entirely.

Validity rollups are the endgame, Starknet is leading the way from day 1

Despite the fact that the earliest rollup constructions theorized and put into production were fraud-proof/optimistic-based rollups, for some time now, it’s been a clear consensus in the Ethereum community that scaling layer 2 will end up with validity proofs as the core mechanism once the technology is good enough.

While the “zero-knowledge” side of validity proofs has stuck as the conceptual branding for the whole field of polynomial commitment scheme-based proof systems, it is actually the succinctness property of validity rollups that is responsible for their unique scalability properties.

Succinctness is more important than zero knowledge

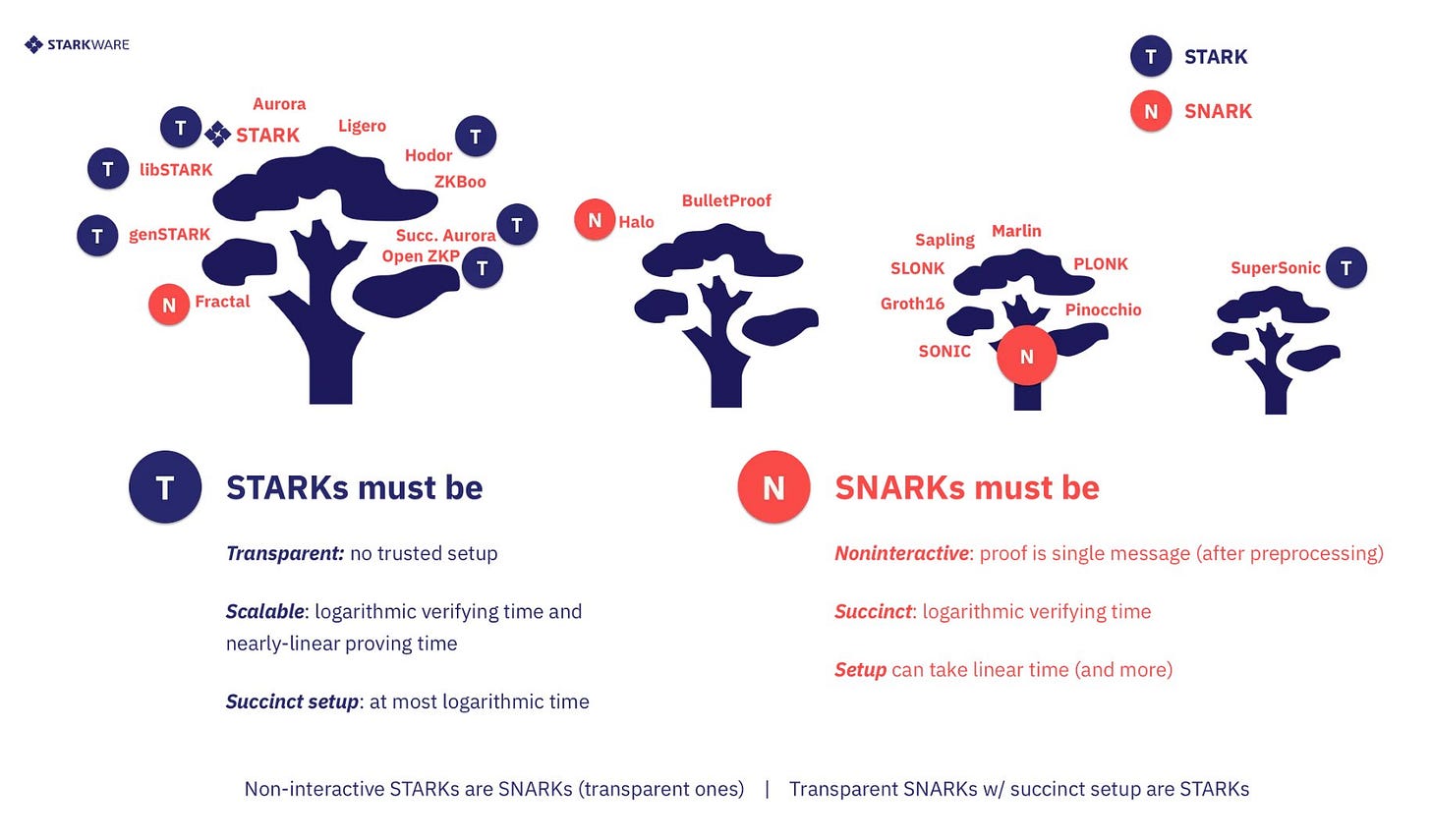

Both STARKs and SNARKs are variations on the same idea: express a computation in the form of arithmetic circuits, so that a proof can show the computation was done correctly without the verifier having to redo it.

This splits the process into two stages. In the “proving” stage, a beefy, even centralized machine called a “prover” does the heavy work. In the verification stage, the verifier (in a rollup construction, the L1 contract) cheaply checks that the computation was performed correctly.

The exact properties of a given system vary depending on the underlying polynomial commitment scheme (for example, FRI in the case of Starknet’s STARKs, or KZG in the case of many PLONK-based SNARKs). The design goal is the same, though: define your computation in terms of arithmetic circuits that are provable with some amount of time/effort but verifiable in significantly less time.

This is the “succinctness” property of validity proofs and essentially represents the “compression” that a validity rollup provides for computation relative to layer 1 execution.

While Polynya was contrasting validity proofs as an overall architecture versus monolithic parallel processing blockchains like Solana here, the same logic – that validity proofs obviate the need for blockchain re-execution and compress arbitrary computation into a single proof – points to the same core efficiency that validity-proven rollups have over optimistic rollups:

The validity-proven execution layer only needs to execute and prove once, so instead of a $5M computational network, you only need $X,000 for execution (because it’s a faster system, >$1,000). Even if the proving cost is 1,000x execution (they aren’t), it’s still cheaper. With proving costs plummeting over time, total compute costs are going to be a very small fraction relative to monolithic execution in the long term. Let me reiterate on that - of course, all of this is a long-term vision…Remember, validity proofs are malleable, can be recursed, folded etc. You can have real-time proofs, longer-term proofs, you can have thousands of executions layer recursing to a single succinct proof etc. – Polynya
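To make the arithmetic in the quote concrete, here is a toy cost model in Rust. Every number is a hypothetical assumption:

- In the replicated case, 5,000 nodes each spend $1,000 re-executing the same workload, matching the quote's “$5M computational network”.
- In the proven case, the workload is executed once and proven at the pessimistic 1,000x overhead the quote mentions.
- Every node then verifies the proof for an assumed $1.

```rust
/// Toy cost model: replicated re-execution vs. "execute and prove once".
/// All dollar figures are hypothetical, chosen only to mirror the quote.

/// Every full node re-executes the same workload.
fn replicated_cost(nodes: u64, exec_cost: u64) -> u64 {
    nodes * exec_cost
}

/// One machine executes, a prover proves at `prove_overhead` times the execution
/// cost, and every node only verifies the succinct proof at `verify_cost`.
fn proven_cost(exec_cost: u64, prove_overhead: u64, nodes: u64, verify_cost: u64) -> u64 {
    exec_cost + exec_cost * prove_overhead + nodes * verify_cost
}

fn main() {
    let nodes = 5_000; // hypothetical network size
    let exec = 1_000; // hypothetical cost of executing the workload once, in dollars
    let replicated = replicated_cost(nodes, exec);
    // Pessimistic case from the quote: proving costs 1,000x execution.
    let proven = proven_cost(exec, 1_000, nodes, 1);
    println!("replicated: ${replicated}, proven: ${proven}");
}
```

Even in this pessimistic case the proven pipeline comes to roughly $1.0M versus $5M. The gap widens as proving overhead falls.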

Succinctness scales vertically

Recursive proofs, laid out in the v0.13.2 Starknet version update, not only allow you to compress the execution of one application or even one chain but of potentially dozens of applications, chains, coprocessors, and other sources of state changes in a singular, aggregated final proof.
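A rough way to see why aggregation scales: suppose each recursive proof verifies up to `arity` child proofs. Then the number of levels needed to fold n proofs into one grows only logarithmically, and L1 verifies just the root. The sketch below counts levels and recursive proofs. The `arity` values and leaf counts are illustrative assumptions, not Starknet parameters.

```rust
/// Fold `leaves` independent proofs into one, where each recursive proof verifies
/// up to `arity` children. Returns (levels of recursion, recursive proofs generated).
/// Purely illustrative; real aggregation trees need not be uniform.
fn aggregate(leaves: u64, arity: u64) -> (u32, u64) {
    assert!(arity >= 2, "a recursive proof must absorb at least two child proofs");
    let mut level_size = leaves;
    let mut levels = 0;
    let mut recursive_proofs = 0;
    while level_size > 1 {
        // One parent proof per group of up to `arity` children (ceiling division).
        level_size = (level_size + arity - 1) / arity;
        recursive_proofs += level_size;
        levels += 1;
    }
    (levels, recursive_proofs)
}

fn main() {
    for leaves in [10, 1_000, 1_000_000] {
        let (levels, proofs) = aggregate(leaves, 2);
        println!("{leaves} leaf proofs -> {levels} levels, {proofs} recursive proofs, 1 verified on L1");
    }
}
```

For 1,000 leaf proofs with binary aggregation, this gives 10 levels and 1,001 recursive proofs. All of them are generated off-chain, and only the single root is verified on L1.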

While prover hardware and software design still have a ways to go, incredible progress is being made on the theoretical bleeding edge by the ZK industry with developments like Circle STARKs (a research collaboration between StarkWare and Polygon) and more efficient production proof systems like the StarkWare Stone prover and upcoming Stwo prover, which both should successively increase proving efficiency by several orders of magnitude.

There is no obvious upper bound on how far these validity-proven systems can scale asynchronously. Shared proving, potentially alongside parallel proving, can achieve synchronous composability for applications that need it, with favorable long-term economics.

Most of these benefits accrue to both STARK- and SNARK-based systems. Indeed, the difference between the two is more about which polynomial commitment scheme is used and what its properties are. This diagram from StarkWare outlines the different flavors of succinct proofs broken into STARKs vs. SNARKs:

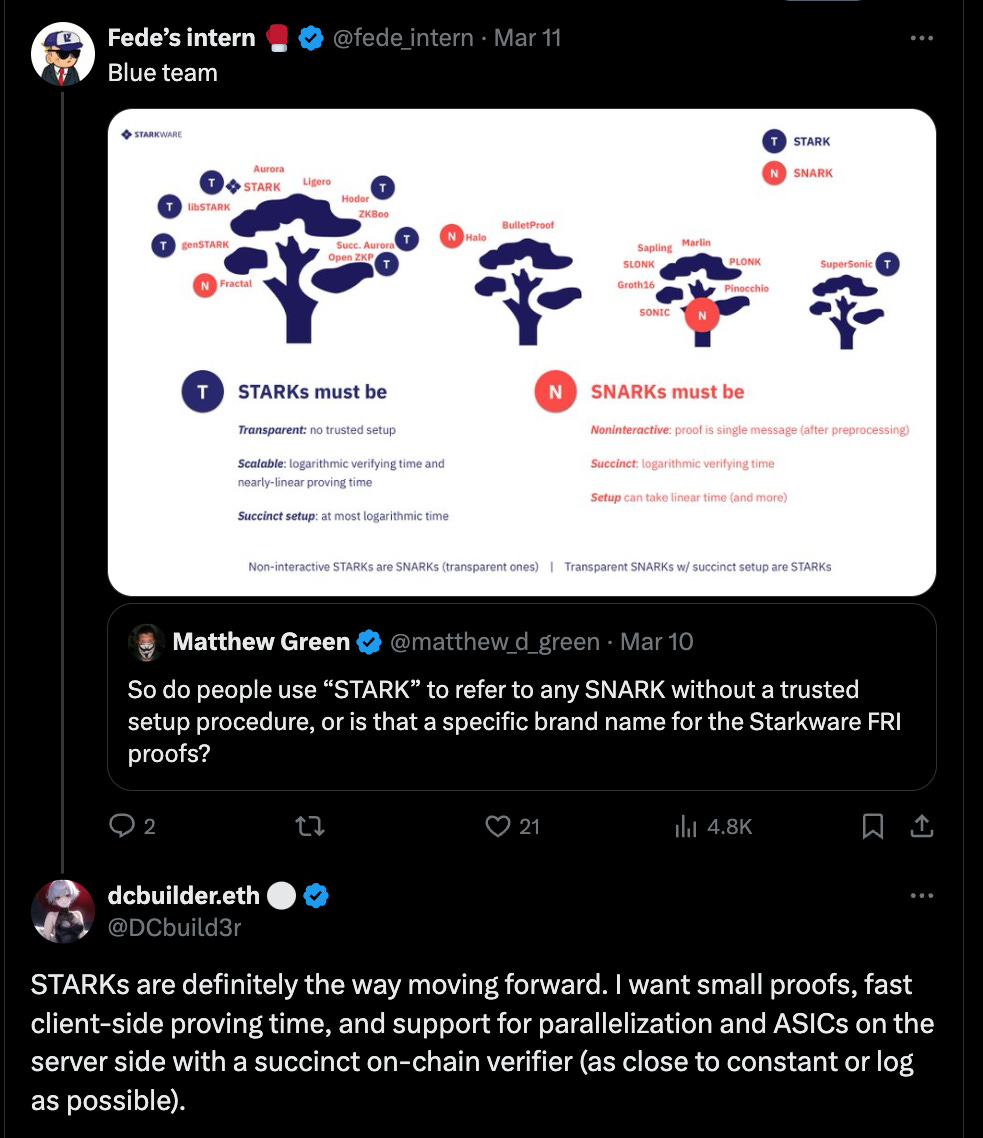

In general, STARKs produce larger proofs that cost more to verify than the smallest SNARKs, but they are faster to generate and rely only on hash functions. Another benefit of STARKs is that they require no trusted setup, something many widely deployed SNARK systems (such as Groth16, or PLONK with KZG commitments) depend on. But probably the most important property of the STARK side of the tech tree is efficient client-side proving, on devices as small as smartphones. That is also likely why STARKs are winning out beyond Starknet, with Risc0 and Plonky3 both doubling down on them as the future of their architectures. DCBuilder puts it nicely here:

The cheapest rollups settle state-diffs

Posting STARK proofs to L1 is the core mechanism by which Starknet inherits Ethereum’s security. Beyond that, Starknet and zkSync in particular have both chosen to publish only state-diffs as data, rather than the full data behind every L2 transaction. Because the validity proof already attests to the intermediate computation, that computation never needs to be published, which greatly reduces the surface area of what a user has to pay for. Bitget helpfully explains what users are paying for in a rollup and where each cost comes from:

[With posting only state diffs to L1] users are only paying for functions that changes L2’s storage slot in L1 instead of paying for all transaction data. State diff rollups are posting only overall change to the state from the batch of transactions (not just one transaction). This is why, if a user had an oracle that updated the same slot 100 times in a single batch, it is only charged for 1 update, because you only care about the last state of the storage slot. Users don't care about the 99 that were overwritten.

Account Abstraction and State Diff:

Quick Reminder: Users pay for three things on rollups: the Layer 2 (L2) execution fee, the Layer 1 (L1) calldata cost (for Data Availability), and the proof verification cost (specific to ZK-rollups).

ERC 4337 and 4337 like protocol-level improvements (such as zkSync's and Starknet's Account Abstraction) introduce a new transaction type that includes two functions: Verification and Execution. In State Diff rollups, the verification process does not alter any slot in Layer 1 (L1). As a result, users only pay for the Layer 2 (L2) execution cost, instead of paying for all transaction data. Additionally, users benefit from the cost improvements associated with State Diff on the transaction execution side. - Cointime from Bitget
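The oracle example in the quote can be sketched as a fold over a batch of storage writes that keeps only the last value written to each slot. The types here are toy stand-ins (integer contract addresses, keys and values), not Starknet's actual state-diff encoding.

```rust
use std::collections::BTreeMap;

/// A storage write: (contract address, storage key, new value). Toy types only.
type Write = (u64, u64, u64);

/// Collapse a batch of writes into a state diff: only the final value of each slot survives.
fn state_diff(batch: &[Write]) -> BTreeMap<(u64, u64), u64> {
    let mut diff = BTreeMap::new();
    for &(contract, key, value) in batch {
        diff.insert((contract, key), value); // later writes overwrite earlier ones
    }
    diff
}

fn main() {
    let oracle = 0xAA;
    let price_slot = 0;
    // The oracle updates the same slot 100 times within one batch...
    let mut batch: Vec<Write> = (1..=100).map(|price| (oracle, price_slot, price)).collect();
    // ...and an unrelated transfer touches two balance slots.
    batch.push((0xBB, 1, 500));
    batch.push((0xBB, 2, 700));
    let diff = state_diff(&batch);
    println!("{} writes -> {} state-diff entries", batch.len(), diff.len());
}
```

Here 102 writes collapse into 3 entries, and only those 3 would need to be posted for data availability.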

When combined with validity proofs, state-diff based rollups offer the most robust scalability properties regardless of how data availability improves. The current post-4844 cost breakdown of different rollups shows Starknet’s execution costs to be significantly lower than those of most other rollups. So while Starknet is not yet the cheapest L2 overall, there is a ton of execution optimization that can be done with the same amount of DA bandwidth. That leads us to believe that over the medium term, Starknet should meaningfully pull ahead as a consistent #1 or #2 cheapest Ethereum L2.

Anyone who comes close or occasionally holds the lead, like zkSync, is almost necessarily going to be both A) a validity rollup and B) a rollup that only posts state-diffs instead of calldata for every L2 transaction. There is also broad agreement on C): within that idea maze, STARKs are indeed the endgame.

ZKRs also have better trust assumptions than OR

While the major optimistic rollups have yet to implement permissionless fraud proofs, introducing fraud proofs will bring ORs closer to their ideal state from a decentralization point of view (regardless of what is done with sequencing) and is an important step forward for the Ethereum rollup ecosystem. That being said, even when fraud proofs are live, validity-proven rollups still provide not only scalability benefits over ORs but also carry arguably more favorable trust properties.

In optimistic constructions, you typically rely on at least one honest actor in the system, whereas validity-proven architectures rely purely on math to verify computational integrity. While the optimistic assumption is cryptoeconomically sound, assuming correct implementation, and while Ethereum itself ultimately derives its security cryptoeconomically, the most trust-minimized way to scale Ethereum is to make it the only source of cryptoeconomic trust and rely on validity proofs rather than re-execution and an optimistic challenge.

This difference is only magnified when you take the comparison up a layer in the stack to L3s and introduce alt-DA. Consider L3s built on optimistic rollup stacks, where the L3 gains a step-function improvement in cost by settling to the L2. These are only made possible by introducing cheaper DA like Celestia or EigenDA. In an optimistic construction, this practically reduces the security of the L3 to that of the DA layer rather than the L1. If the DA layer is compromised, the sequencer can withhold data so that no one can construct a fraud proof, which means an invalid state transition could be forced through and funds lost. Validity rollups handle this differently. In their version of the “L3,” introducing alt-DA (in a Validium or Volition construction, for example) does not allow an invalid state transition to be included. Withholding data can only cause censorship and a liveness failure.

Cryptoeconomic (CE) security and cryptographic security are not strictly in competition with each other. Particularly while ZK proving and proof aggregation are still scaling up, adding CE may have benefits (see Brevis’ hybrid ZK-CE coprocessor). There are also interesting game-theoretic questions about the long-term equilibrium differential between these two modes of computational validity. But fundamentally, wherever you can use pure math instead of game theory, your system is more transparently secure and trust-minimized.

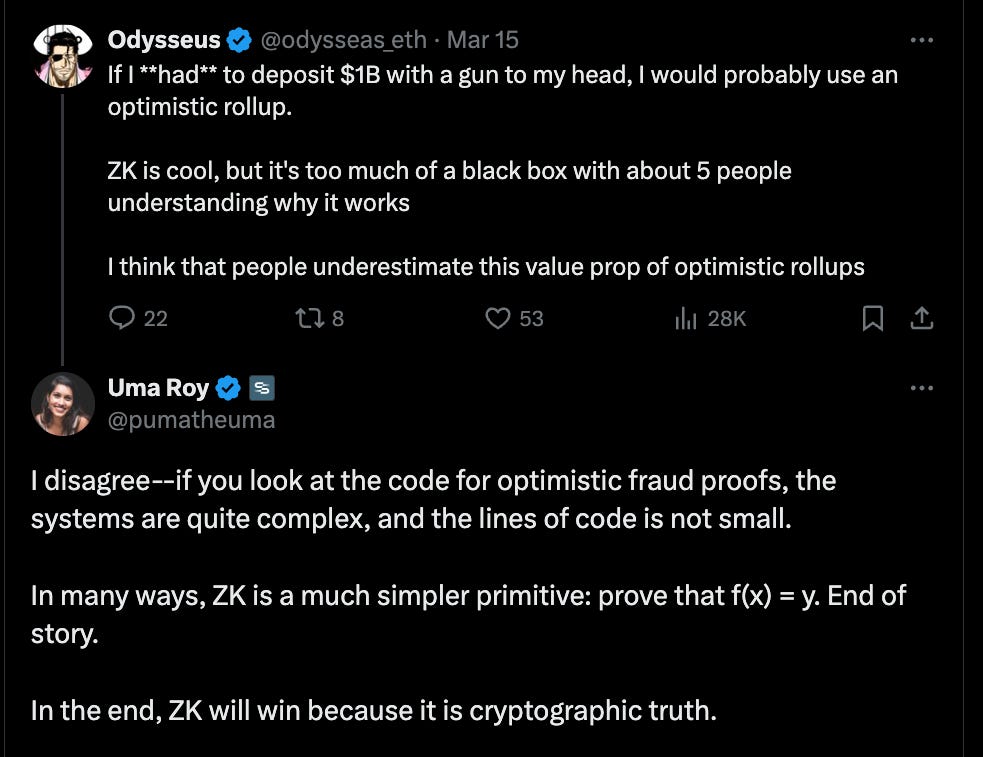

Finally, there’s a strong case to be made that fraud-proof mechanisms are actually quite complex when compared to proving and verifying computations inside a ZK-circuit. However, it's also reasonable to argue that ZK math and circuit design are even more inaccessible, with fewer experts able to fully understand them, compared to the already small pool of smart contract experts. As a result, ZK proofs may be just as complex overall. This is encapsulated well in the exchange here:

Ultimately, “trust the math” will obviously only work if more and more researchers and engineers in the space are able to reason about ZK’s underlying fundamentals and trust intermediate abstractions like Cairo as secure implementations of the results that they are expecting. StarkWare and the Starknet Foundation can obviously do a lot to shed light on the assumptions of their implementations and new breakthroughs (like the Stwo prover) and hold it up to scrutiny by the research community while making it as accessible as possible for app developers and users.

Proving Performance

Proving costs are ultimately passed on to users, and Cairo excels in both prover diversity and prover performance.

Starknet has made leaps in prover diversity

In 2022, Starknet was often criticized for having a closed, proprietary stack, with its STARK prover being closed source. The early thinking was cautious about changing that (quote from Eli Ben-Sasson below), out of concern that the prover could be appropriated by large companies (such as cloud providers):

Licenses have been useful in the past in preventing abuse and free riding by various large entities that have not contributed to the development of the code/ecosystem but nevertheless wanted to reap the benefits of an ecosystem’s contribution.

This wasn't an imaginary concern; Amazon's strategy to build thin wrappers around open-source software and commercialize them traces back to the origins of Amazon Web Services:

Within a year, Amazon was generating more money from what Elastic had built than the start-up, by making it easy for people to use the tool with its other offerings. So Elastic added premium features last year and limited what companies like Amazon could do with them. Amazon duplicated many of those features anyway and provided them free.

In August 2023, however, StarkWare decided to open-source their Stone Prover under an Apache License. By that point, the Starknet Stack was already taking shape. Perhaps validated by Optimism's launch of the OP Stack or encouraged by the wonderful contributions of LambdaClass (among others), who stepped in and delivered several core components, including an execution engine, sequencer, and the Platinum prover, Starknet pressed on.

Cairo has no match in prover performance

While prover diversity is desirable for network decentralization, it doesn't guarantee performance by itself. To assess performance, there are few apples-to-apples comparisons. Comparing performance to zkEVM provers may be misleading as they are proving circuits for a virtual machine that wasn't optimized for it. Luckily, there is a recent trend of proving Rust code which happens (by design) to be very comparable to Cairo code.

Tarrence from Cartridge reported that the legacy Platinum prover by LambdaClass was nearly 3x faster than SP1’s prover.

Given SP1’s favorable benchmarks relative to Risc0, this potentially makes the Platinum prover the leading prover for a Rust-like language. But that's not all. StarkWare recently announced that it is working on a new iteration of its prover, called Stwo, which will incorporate Circle STARKs. Once Stwo ships, the performance gap should become significantly larger.

One critique of prover evolution is that prover improvements don't always come without UX tradeoffs for developers. Stwo will change the native field element for Cairo. In principle, this shouldn't affect developers who defaulted to higher-level data types in the first place, but it would affect anyone who was overly aggressive in optimizing their state-diffs through the use of felt252 types.
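To make the field change concrete, consider the two field sizes:

- Circle STARKs make it possible to prove over the Mersenne-31 field, p = 2^31 - 1, whose elements fit in a single 32-bit machine word.
- Cairo's felt252 lives in a roughly 252-bit prime field.

The sketch below illustrates why such a small field is fast. It is an illustrative implementation, not Stwo's code. Because 2^31 ≡ 1 (mod p), reduction needs only a shift, a mask and an add instead of a general division.

```rust
/// The Mersenne-31 prime, p = 2^31 - 1.
const P: u64 = (1 << 31) - 1;

/// Reduce any u64 modulo p. Writing x = hi * 2^31 + lo and using 2^31 ≡ 1 (mod p)
/// gives x ≡ hi + lo, so we fold the halves together and subtract p a few times at most.
fn reduce(x: u64) -> u32 {
    let mut r = (x & P) + (x >> 31);
    while r >= P {
        r -= P;
    }
    r as u32
}

/// Field addition for inputs already reduced below p.
fn add(a: u32, b: u32) -> u32 {
    let s = a as u64 + b as u64;
    (if s >= P { s - P } else { s }) as u32
}

/// Field multiplication for inputs already reduced below p (the product fits in u64).
fn mul(a: u32, b: u32) -> u32 {
    reduce(a as u64 * b as u64)
}

fn main() {
    let minus_one = (P - 1) as u32;
    println!("(-1) * (-1) = {}", mul(minus_one, minus_one)); // 1
    println!("2 * 2^30 = {}", mul(2, 1 << 30)); // 2^31 ≡ 1
    println!("(p - 1) + 1 = {}", add(minus_one, 1)); // 0
}
```

A value that needs 252 bits no longer fits in one such element. That is why code that packed data tightly into felt252 is the most exposed to the change.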

Starknet is already scaling

Endgames and next-generation provers are all well and good, but we are in a bull market, and there is demand for DeFi right now that is up for grabs. The introduction of EIP-4844 blobs has led to a healthy decrease in fees for all layer 2s, though (as expected) there have still been episodes of congestion and periods of high fees, particularly on Base. While the core devs work diligently to roll out the rest of the DA scaling roadmap, the L2s must find other ways to scale and achieve PMF. Starknet is not yet the cheapest, fastest, and most fully featured of the Ethereum rollups. With some important improvements, though, it is on a path to potentially securing that crown in the short-to-medium run.

BlockSTM Parallelization

Parallelization has been one of the hot narratives of this cycle, and Starknet has become a “parallelized” blockchain with the addition of BlockSTM parallelization in Starknet 0.13.2. BlockSTM, a parallel execution engine for smart contracts built on the principles of “Software Transactional Memory,” was first developed for the Diem and Aptos blockchains. Thanks to this upgrade, Starknet can now process 5-10x more transactions-per-second (TPS), a significant improvement from the previous 100 TPS.
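Block-STM's core idea is optimistic concurrency:

1. Run transactions in parallel as if they did not conflict, recording what each one reads.
2. Validate them in block order.
3. Re-execute only those whose reads were invalidated by an earlier write.

The sketch below is a heavily simplified version of that idea under stated assumptions. It uses a toy key-value state and models transactions as plain functions. It also uses a single ordered validation pass instead of Block-STM's multi-version data structure and collaborative scheduler. Under those assumptions, the final state always matches sequential execution.

```rust
use std::collections::{HashMap, HashSet};

/// Toy world state (hypothetical, not Starknet's storage model).
type State = HashMap<&'static str, i64>;
type Writes = Vec<(&'static str, i64)>;

/// Records every key a transaction reads so conflicts can be detected later.
struct Reader<'a> {
    state: &'a State,
    reads: HashSet<&'static str>,
}

impl Reader<'_> {
    fn get(&mut self, key: &'static str) -> i64 {
        self.reads.insert(key);
        self.state.get(key).copied().unwrap_or(0)
    }
}

/// A transaction is a deterministic function from the values it reads to the writes it makes.
struct Tx {
    run: fn(&mut Reader<'_>) -> Writes,
}

fn execute(tx: &Tx, state: &State) -> (HashSet<&'static str>, Writes) {
    let mut reader = Reader { state, reads: HashSet::new() };
    let writes = (tx.run)(&mut reader);
    (reader.reads, writes)
}

/// Execute all transactions in parallel against the pre-block snapshot, then validate and
/// commit in block order, re-running any transaction that read a key written earlier in
/// the block. Returns the final state and the number of re-executions.
fn execute_block(txs: &[Tx], mut state: State) -> (State, usize) {
    let snapshot = state.clone();
    let snap = &snapshot;
    let speculative: Vec<_> = std::thread::scope(|s| {
        let handles: Vec<_> = txs.iter().map(|tx| s.spawn(move || execute(tx, snap))).collect();
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    });

    let mut written: HashSet<&'static str> = HashSet::new();
    let mut reexecutions = 0;
    for (tx, (reads, writes)) in txs.iter().zip(speculative) {
        let conflict = reads.iter().any(|key| written.contains(key));
        let writes = if conflict {
            reexecutions += 1;
            execute(tx, &state).1 // re-run against the state committed so far
        } else {
            writes
        };
        for (key, value) in writes {
            written.insert(key);
            state.insert(key, value);
        }
    }
    (state, reexecutions)
}

fn debit_a_credit_b(r: &mut Reader<'_>) -> Writes {
    let (a, b) = (r.get("a"), r.get("b"));
    vec![("a", a - 10), ("b", b + 10)]
}

fn bump_c(r: &mut Reader<'_>) -> Writes {
    vec![("c", r.get("c") + 5)]
}

fn double_b_into_d(r: &mut Reader<'_>) -> Writes {
    vec![("d", r.get("b") * 2)] // depends on the first transaction's write to "b"
}

fn main() {
    let txs = [Tx { run: debit_a_credit_b }, Tx { run: bump_c }, Tx { run: double_b_into_d }];
    let state: State = HashMap::from([("a", 100), ("b", 0), ("c", 0), ("d", 0)]);
    let (final_state, reexecutions) = execute_block(&txs, state);
    println!("{final_state:?}, re-executions: {reexecutions}");
}
```

The first two transactions touch disjoint keys and commit straight from their speculative runs. The third read `b` before the first transaction's write landed, so it is re-executed once.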

Cairo native

Another major improvement is coming in Starknet 0.13.3 (Q1 2025): Cairo Native, a major contribution by Starknet community members at LambdaClass, built with the help of Nethermind. Today’s Starknet sequencer executes transactions on the CairoVM, which is written in Rust. Cairo Native instead compiles Sierra (Cairo’s intermediate representation) through MLIR and LLVM into native machine code that runs directly on the CPU. Removing this layer of interpretation from the sequencer should also increase Starknet TPS capacity dramatically, potentially up to 10x on top of the potential 10x that BlockSTM parallelization introduces.
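As a loose illustration of what removing the interpretation layer means, compare a toy stack-machine interpreter with the same arithmetic compiled ahead of time. This does not model the CairoVM or Cairo Native. It only shows the per-instruction fetch, dispatch and stack traffic that native compilation eliminates.

```rust
/// A toy bytecode, standing in very loosely for an interpreted VM's instruction set.
#[derive(Clone, Copy)]
enum Instr {
    Push(u64),
    Add,
    Mul,
}

/// Interpreter: every instruction costs a fetch, a `match` dispatch and stack pushes/pops.
fn interpret(program: &[Instr]) -> u64 {
    let mut stack: Vec<u64> = Vec::new();
    for &instr in program {
        match instr {
            Instr::Push(v) => stack.push(v),
            Instr::Add => {
                let (b, a) = (stack.pop().unwrap(), stack.pop().unwrap());
                stack.push(a + b);
            }
            Instr::Mul => {
                let (b, a) = (stack.pop().unwrap(), stack.pop().unwrap());
                stack.push(a * b);
            }
        }
    }
    stack.pop().expect("program left an empty stack")
}

/// The same computation, (x + y) * z, compiled ahead of time: no dispatch loop and no
/// runtime stack, just the machine instructions for the arithmetic itself.
fn native(x: u64, y: u64, z: u64) -> u64 {
    (x + y) * z
}

fn main() {
    // (2 + 3) * 4 as bytecode.
    let program = [Instr::Push(2), Instr::Push(3), Instr::Add, Instr::Push(4), Instr::Mul];
    println!("interpreted: {}", interpret(&program)); // 20
    println!("native: {}", native(2, 3, 4)); // 20
}
```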

Block Packing (applicative recursion)

One of the core theoretical benefits of validity-proven rollups has always been recursive proofs, an inherent affordance of most succinct proof systems: you can prove the verification of a proof (and so on). Starknet recently put this theory into practice in the 0.13.2 version update. There, a more abstract concept of Starknet “jobs,” rather than entire Starknet blocks, serves as the root of a new kind of recursive tree against which state-diffs are submitted. This essentially allows Starknet to amortize the cost of submitting state updates to Ethereum blobspace over multiple blocks. Costs-per-blob for Starknet drop from ~215k to 74-98k gas, a decrease of roughly 55-65% in Starknet gas costs without any increase in DA bandwidth.
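The percentages follow directly from the quoted gas figures, and the amortization effect can be sketched with a simple model. The model is an assumption for illustration only. It has a fixed L1 overhead per submission plus a per-block data cost, with invented values chosen only so that one block per submission reproduces the ~215k starting point.

```rust
/// Percentage reduction from an old to a new gas cost.
fn reduction_pct(old_gas: f64, new_gas: f64) -> f64 {
    (old_gas - new_gas) / old_gas * 100.0
}

/// Hypothetical amortization model: a fixed overhead per state-update submission plus a
/// per-block data cost. Packing `blocks_per_submission` blocks spreads the fixed part.
fn gas_per_block(fixed_overhead: f64, per_block_data: f64, blocks_per_submission: f64) -> f64 {
    fixed_overhead / blocks_per_submission + per_block_data
}

fn main() {
    // Figures quoted in the text.
    println!("215k -> 98k gas: {:.1}% cheaper", reduction_pct(215_000.0, 98_000.0));
    println!("215k -> 74k gas: {:.1}% cheaper", reduction_pct(215_000.0, 74_000.0));
    // Illustrative only: the 160k overhead and 55k per-block cost are invented.
    for k in [1.0, 2.0, 4.0, 8.0] {
        println!("{k} blocks/submission: {:.0} gas per block", gas_per_block(160_000.0, 55_000.0, k));
    }
}
```

The quoted figures work out to about a 54% reduction (98k) and a 66% reduction (74k). With these made-up inputs, packing 4 to 8 blocks per submission lands in the quoted 74-98k range.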

State-diff compression and optimization

As mentioned, Starknet only posts state-diffs to mainnet Ethereum, and while we described why this reduces overall DA usage, there is still a ton of optimization across the stack, notably in improving the compression of the state-diffs that Starknet is posting. This is tentatively planned for the 0.13.3 Starknet release.

Volition or “hybrid data availability”

Finally, the long-awaited “Volition mode” is coming. Ethereum’s roadmap has begun to deliver some DA cost relief with Proto-Danksharding, with phase 1 of Danksharding expected in ~1 year. Even so, DA remains a bottleneck in scaling, and developers are starting to find alternative solutions in order to deliver a seamless experience. Volition aims to smooth some of the implications of that.

Celestia has picked up a number of integrations in the past months, and as Avail, EigenDA, and other alt-DA layers arrive on the scene, and as even Bitcoin is starting to be considered as a potential DA layer with OP_CAT, the DA landscape for rollups has begun to expand beyond just Ethereum L1. Volitions allow app developers to specify where they want data to be posted for availability, be that Ethereum L1 (what we’d call the strict definition of an Ethereum rollup mode), an alt-DA layer like Celestia or Avail or even a post OP_CAT Bitcoin, or some strictly offchain server in what would be considered a Validium like StarkEx.

Volition makes it extremely ergonomic for developers to mix and match DA needs at the application layer. This allows mixed constructions in which developers and/or users can choose more or less security granularly within their application logic. Essentially, L2 applications can send data that isn’t “important” enough to commit to L1 DA somewhere cheaper, as shown in this diagram from Starknet:

For example, trades under a certain amount could be posted by the application to a centralized but validity-proven server, and trades over a certain amount could have their data committed to Ethereum in a Starknet rollup batch.

This gives DeFi developers the option not to go “all or nothing” in choosing their DA when tuning their scaling tradeoff curve. Instead, they can make that choice granularly, case by case, thereby reducing the friction to actually settling the most valuable and important state to Ethereum DA.

This doesn’t reduce the cost of Starknet in full rollup mode. It does, however, give developers easy access to alt-DA for operations that can afford to be less secure, while keeping the rest of their application “secured by Ethereum.”
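The threshold policy described above (small trades to cheap off-chain DA, large trades to Ethereum) could look something like the following at the application layer. The `DaMode` enum, the `Trade` type and the 10,000 USD threshold are hypothetical. They are not Starknet's transaction fields or any real protocol's parameters.

```rust
/// Where a trade's state changes are made available (hypothetical names).
#[derive(Debug, Clone, Copy, PartialEq)]
enum DaMode {
    /// Published to Ethereum L1 as part of the rollup batch.
    EthereumL1,
    /// Kept off Ethereum (an alt-DA layer or an operator's server). Still validity-proven,
    /// but data withholding could cause censorship or a liveness failure.
    OffChain,
}

struct Trade {
    notional_usd: u64,
}

/// Application-level policy: trades at or above the threshold pay for Ethereum DA,
/// smaller ones use cheaper off-chain DA.
fn choose_da(trade: &Trade, threshold_usd: u64) -> DaMode {
    if trade.notional_usd >= threshold_usd {
        DaMode::EthereumL1
    } else {
        DaMode::OffChain
    }
}

fn main() {
    let threshold = 10_000; // hypothetical cut-off
    for notional_usd in [250, 9_999, 10_000, 2_000_000] {
        let mode = choose_da(&Trade { notional_usd }, threshold);
        println!("{notional_usd} USD -> {mode:?}");
    }
}
```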

Part II: Cairo is the Developer Stack for Provable Computing

Why Ethereum Needs Another Programming Language

Georgios Konstantopoulos made a compelling point that the underlying limitations of the EVM cannot be blamed for the lack of innovation at the application layer.

One critique of this argument is that security tooling is held back by EVM limitations. There have been early attempts to get rid of dynamic jumps, which prevent a given smart contract from being cleanly decomposed into SSA form without some form of loop unrolling.

When building formal verification tools, this makes it a lot more difficult to bound computations that attest to various properties. Usually, these tools must make assumptions about how jumps work or simply bound their branching factor to make assertions viable.
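To see why dynamic jumps complicate analysis, consider a toy bytecode where `Jump` pops its destination off the stack. A static analyzer can recover the control-flow edge when the destination was pushed as a constant right before the jump, the common PUSH-then-JUMP pattern. It cannot recover the edge when the destination comes from runtime data. The instruction set here is invented for illustration; real EVM tools use much richer stack analysis.

```rust
/// A made-up instruction set, loosely inspired by EVM PUSH/JUMP.
#[derive(Clone, Copy)]
enum Op {
    Push(usize), // push a constant onto the stack
    Load,        // push a value read from storage/calldata: unknown statically
    Jump,        // pop the destination from the stack and jump there
    Stop,
}

/// For each Jump, try to resolve its destination from the instruction just before it.
/// Returns (pc of the jump, Some(destination) or None if it is only known at runtime).
fn resolve_jumps(code: &[Op]) -> Vec<(usize, Option<usize>)> {
    code.iter()
        .enumerate()
        .filter(|(_, op)| matches!(op, Op::Jump))
        .map(|(pc, _)| {
            let target = match pc.checked_sub(1).map(|prev| code[prev]) {
                Some(Op::Push(dest)) => Some(dest),
                _ => None, // destination came from runtime data: the CFG edge is unknown
            };
            (pc, target)
        })
        .collect()
}

fn main() {
    let code = [Op::Push(3), Op::Jump, Op::Stop, Op::Load, Op::Jump, Op::Stop];
    for (pc, target) in resolve_jumps(&code) {
        match target {
            Some(dest) => println!("jump at {pc}: static edge to {dest}"),
            None => println!("jump at {pc}: target unknown until runtime"),
        }
    }
}
```

An unresolved edge forces a verifier either to assume the jump can land on any valid destination or to bound the branching. Both options weaken or complicate the properties it can prove.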

But aside from security tooling, this argument stands: our compiler stack should be able to limit the leakage of VM details into our programming environment. So why was this tweet written, and why is there so little innovation at the EVM application layer? This is especially confusing as we have had much cheaper and wider block space availability recently than ever before due to the proliferation of L2s. Unfortunately, the limiting factor is Solidity. The language and compiler are highly leaky abstractions.

Gas costs of current EVM blockchains lead to low-level optimizations

Being a good Solidity engineer has become synonymous with being a “gas optimizer.” At the extreme, protocols like Seaport are almost exclusively written in Yul. Recent Solidity features, such as finally skipping overflow checks on simple loop-counter increments automatically, indicate that it is firmly a language optimized for L1/mainnet deployments.

As a result, using it on L2 feels unnecessarily restrictive and not just from an optimization perspective.

Solidity makes it impossible to stack abstractions

Solidity was developed quickly and inspired by JavaScript and C++. As a result, its type system falls short of modern expectations. Solidity lacks expressive enums (sum types whose variants carry data), generics, and other type-safety features, and it tries to compensate for these missing pieces in ad hoc ways, like introducing user-defined value types. This makes inheritance highly risky: instead of composing property-checked, composable code, Solidity simply appends imperative code.

As a result, Solidity libraries are very “flat” (this is also often recommended as necessary from a security perspective) and usually use OpenZeppelin contracts, maybe an ERC template, and write everything else from scratch rather than building on existing abstractions.
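For contrast, here is what sum types and generics look like in Rust, whose enums, traits and generics Cairo largely mirrors. The `Settlement` enum, `Token` trait and `Ledger` type are hypothetical examples. Each enum variant carries its own data, and `match` must cover every case. `transfer` is written once against a trait bound and can be reused by any type that implements it.

```rust
use std::collections::HashMap;

/// A sum type: each variant carries exactly the data that makes sense for it.
enum Settlement {
    Pending,
    Filled { price: u128, amount: u128 },
    Rejected { reason: &'static str },
}

/// The compiler rejects this `match` if any variant is left unhandled.
fn describe(s: &Settlement) -> String {
    match s {
        Settlement::Pending => "pending".to_string(),
        Settlement::Filled { price, amount } => format!("filled {amount} @ {price}"),
        Settlement::Rejected { reason } => format!("rejected: {reason}"),
    }
}

/// A trait that any token implementation can satisfy.
trait Token {
    fn balance_of(&self, owner: u32) -> u128;
    fn set_balance(&mut self, owner: u32, amount: u128);
}

/// Written once, reusable for every `T: Token`; the bound is checked at each call site.
fn transfer<T: Token>(token: &mut T, from: u32, to: u32, amount: u128) -> Result<(), &'static str> {
    let from_balance = token.balance_of(from);
    if from_balance < amount {
        return Err("insufficient balance");
    }
    token.set_balance(from, from_balance - amount);
    let to_balance = token.balance_of(to);
    token.set_balance(to, to_balance + amount);
    Ok(())
}

struct Ledger(HashMap<u32, u128>);

impl Token for Ledger {
    fn balance_of(&self, owner: u32) -> u128 {
        self.0.get(&owner).copied().unwrap_or(0)
    }
    fn set_balance(&mut self, owner: u32, amount: u128) {
        self.0.insert(owner, amount);
    }
}

fn main() {
    let mut ledger = Ledger(HashMap::from([(1, 100)]));
    println!("{:?}", transfer(&mut ledger, 1, 2, 30)); // Ok(())
    println!("{:?}", transfer(&mut ledger, 2, 1, 31)); // Err("insufficient balance")
    for s in [
        Settlement::Pending,
        Settlement::Filled { price: 3, amount: 2 },
        Settlement::Rejected { reason: "expired" },
    ] {
        println!("{}", describe(&s));
    }
}
```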

Solidity is the standard, not the EVM

To make matters worse, the rest of the EVM ecosystem has canonicalized Solidity to the point where its original architecture decisions are leaking through other parts of the stack. From jtriley:

At the time of writing, Solidity dominates the smart contract language design space. Client interfaces only implement Solidity’s Application Binary Interface (ABI), New Ethereum Improvement Proposals (EIPs) implicitly use Solidity’s ABI, debuggers operate on Solidity source maps, and even other smart contract languages start at Solidity’s base layer of abstraction and attempt to deviate from the same bedrock with minor syntax differences and backend improvements.

Despite these limitations, there are still some bold initiatives like MUD, but they are few and far between. In short, the EVM is not the blocker; the ossification of Solidity is. Adding the ability to compile Sierra to the EVM would immediately give the Ethereum ecosystem a very powerful L2-facing smart contract language that would unlock app-level innovation.

MultiVM

Arbitrum Stylus is an upgrade to the Arbitrum Nitro stack that enables EVM-compatible smart contracts written in languages that compile to WebAssembly, like Rust. Stylus shares the EVM's “hardware” (e.g., its state and storage) but has its own separate virtual machine. Arbitrum calls this paradigm “MultiVM,” since contracts in either VM are entirely interoperable.

Stylus solves some of the problems with Solidity and the EVM by improving developer ergonomics (albeit with heavy use of macros) and optimizing compute/memory costs. However, Stylus still relies on EVM storage and imposes additional burdens on validators (who must run multiple VMs) and even on developers. Stylus developers have to rely on code caching for optimal performance and have to re-activate their contracts on a yearly basis. It is not possible to build persistent hyperstructures like Uniswap in Stylus. Last but not least, Stylus is built on WebAssembly, a VM optimized for direct hardware execution (on the web) rather than provability.

Cairo as the Proving Paradigm Language

Whenever there is a new compute paradigm due to emerging types of “hardware”, it changes how software is developed and optimized.

This starts with developers figuring out how to develop code for this new abstraction manually. Eventually, new languages and paradigms are born that identify better abstractions that improve developers’ tradeoffs between high-level abstraction and performance.

Cluster computing over distributed data sets

One such transition occurred in cluster computing over distributed data sets. From the Google MapReduce paper:

Over the past five years, the authors and many others at Google have implemented hundreds of special-purpose computations that process large amounts of raw data. […]

The issues of how to parallelize the computation, distribute the data, and handle failures conspire to obscure the original simple computation with large amounts of complex code to deal with these issues.

[…]

As a reaction to this complexity, we designed a new abstraction that allows us to express the simple computations we were trying to perform but hides the messy details of parallelization, fault-tolerance, data distribution and load balancing in a library.

While initially, engineers wrote custom code to implement cluster computing jobs, MapReduce was introduced as an abstraction that allowed developers to leverage the inherent parallelism while writing only business logic.
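A miniature, single-machine version of the MapReduce programming model shows the split the paper describes. The caller writes only the `map` and `reduce` functions, while the library handles parallelism and grouping. This is a sketch using scoped threads, not Google's implementation, and it omits distribution and fault tolerance entirely.

```rust
use std::collections::HashMap;
use std::hash::Hash;
use std::thread;

/// Toy MapReduce: runs `map` on each input split in its own thread, then groups the
/// emitted (key, value) pairs by key and folds each group with `reduce`.
fn map_reduce<I, K, V, M, R>(inputs: &[I], map: M, reduce: R) -> HashMap<K, V>
where
    I: Sync,
    K: Eq + Hash + Send,
    V: Send,
    M: Fn(&I) -> Vec<(K, V)> + Sync,
    R: Fn(V, V) -> V,
{
    let map = &map; // share one `map` function across worker threads
    // Map phase: one worker per input split.
    let mapped: Vec<Vec<(K, V)>> = thread::scope(|s| {
        let handles: Vec<_> = inputs.iter().map(|input| s.spawn(move || map(input))).collect();
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    });
    // Shuffle + reduce phase: group by key and fold values together.
    let mut out = HashMap::new();
    for (key, value) in mapped.into_iter().flatten() {
        let merged = match out.remove(&key) {
            Some(acc) => reduce(acc, value),
            None => value,
        };
        out.insert(key, merged);
    }
    out
}

fn main() {
    // Classic word count: the caller supplies only the business logic.
    let docs = ["the cat sat", "the dog sat", "the end"];
    let counts = map_reduce(
        &docs,
        |doc| -> Vec<(String, u32)> { doc.split_whitespace().map(|w| (w.to_string(), 1)).collect() },
        |a: u32, b: u32| a + b,
    );
    println!("{counts:?}");
}
```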

GPU-based machine learning over neural networks

Early machine learning followed a similar trajectory where early pioneers would use GPU interfaces to encode neural network training.

TensorFlow and other higher-level APIs allowed developers to define neural network architectures agnostic of the underlying hardware. TensorFlow is particularly interesting because the team at Google developed MLIR, a multi-layer compiler framework that allowed them to seamlessly compile the same compute graphs to CPU, GPU, and even custom TPU training workloads.

There are some exceptions to this pattern, where an existing language carried over well to new hardware.

For example, the SQL language has captured the abstraction of querying so well that it is now used across various hardware configurations, ranging from local text files to databases and even blockchain data.

In client-side development, languages like ClojureScript, ReScript, and PureScript/Elm have readily adapted existing backend languages to work well enough on browser-based JavaScript VMs.

However, current smart contract languages like Solidity are still heavily optimized for the specific constraints of EVM execution. Provable computing also differs enough from programming regular hardware that we are optimistic about the ergonomics/performance tradeoff of using Cairo.

It's worth examining the prospect of using regular programming languages like Rust in a provable context, however.

Cairo as Provable Rust

Rust is fast becoming the favored language of the crypto community. Its focus on performance and safety while maintaining a high level of abstraction makes it ideal for the low-level development required in crypto. In fact, significant parts of the Starknet stack, ranging from the Cairo compiler itself to the various provers and sequencers, are currently written in Rust.

So, it's natural to wonder why we need Cairo at all. Why didn't StarkWare simply make Rust provable and call it a day?

This is an even more pertinent question in 2024 as capable teams like Risc0 and SP1 are working exactly on this problem.

SP1's documentation states: “SP1 democratizes access to ZKPs by allowing developers to use programmable truth with popular programming languages”. Within this statement lies part of the answer already. To make existing computer programs provable, it makes sense a priori to make their underlying languages provable. Unfortunately, this doesn't quite work in practice.

Developers cannot prove the standard library.

We could see a game studio deciding to use client-side proving of a C++ computation by compiling it with LLVM to a zkVM's target architecture (such as RISC-V) and using a dedicated prover. This is somewhat limited at the moment, however, as both Risc0 and SP1 support no_std Rust. In short, this is Rust with “batteries removed”: no standard library, common idioms, etc. This limits the amount of existing code that can be easily converted, but the gap could close in the future as compilation targets like WASM gain more adoption.

Rust wasn't built for provability, and that's a good thing.

The issue with using Rust to develop novel blockchain applications like smart contracts, coprocessor queries, client-side proving logic, and many other layers is that it simply wasn't built for provability. Rust, more than most languages, is shaped by its stated design goal of careful memory management in systems development. Features like borrowing often get in the way of even seasoned programmers. What draws one of the authors to Rust for regular programming is precisely the way its idioms incentivize writing performant code.

One example of this is Rust closures (essentially unnamed functions used as values). The developer pays a small price when using closures in abstractions (traits): the generic bound has to spell out which closure trait (Fn, FnMut or FnOnce) applies, depending on how the closure uses its captured environment.

This is an example function that uses closures in the standard library:

In return, Rust compiles closures very efficiently (often inlining them), whereas other languages may default to allocating each closure on the heap. Some of the benefits of Rust's strict typing, such as the distinction between the reference-counted Rc and atomically reference-counted Arc types, only show up once you write concurrent code.
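To make the closure tradeoff concrete, here is a minimal Rust sketch. The helper names (`apply_twice`, `call_n_times`, `run_once`) are hypothetical and not taken from the standard library; the point is only that each generic function has to state in its bound whether the closure it accepts is `Fn`, `FnMut` or `FnOnce`.

```rust
// Hypothetical helpers (not from the standard library) showing how a generic
// function must name the closure trait it needs in its bound.

/// `Fn`: the closure only reads what it captured, so it can be called repeatedly.
fn apply_twice<F: Fn(u64) -> u64>(f: F, x: u64) -> u64 {
    f(f(x))
}

/// `FnMut`: the closure mutates captured state, so it is taken as `mut`.
fn call_n_times<F: FnMut()>(mut f: F, n: usize) {
    for _ in 0..n {
        f();
    }
}

/// `FnOnce`: the closure may move captured values out, so it runs at most once.
fn run_once<F: FnOnce() -> String>(f: F) -> String {
    f()
}

fn main() {
    let offset: u64 = 10;
    // Borrows `offset`; each closure has its own type, so the call is statically
    // dispatched and can be inlined without any heap allocation.
    let add_offset = |x: u64| x + offset;
    assert_eq!(apply_twice(add_offset, 1), 21);

    let mut counter = 0;
    call_n_times(|| counter += 1, 3);
    assert_eq!(counter, 3);

    let name = String::from("starknet");
    // `move` transfers ownership of `name` into the closure, which hands it back.
    let returned = run_once(move || name);
    assert_eq!(returned, "starknet");
}
```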

Cairo is an application of the Rust philosophy to the problem of provable computing. Consider the snapshot operator (@), an evolution of Rust's borrowing that reflects the trace layout of variables.

This program works in Cairo, but the analogy doesn't carry over to Rust. In Rust, you can't mutate a value while a borrow of it is still in use, a rule that helps with thread safety, for instance.

In Cairo, you can take a snapshot of a value because all variables are immutable in circuits. This is just one example of a more general idea: Cairo is built from the ground up with Rust-inspired syntax, but in a way that facilitates circuit generation. It is philosophically more aligned with Rust than a blanket Rust-to-ZK transpiler would be.
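The Rust side of this comparison can be sketched as follows, assuming a simple `Rectangle` type that is hypothetical and not from the original program. Holding a reference across a mutation is rejected by the borrow checker, so the closest runnable Rust analogue of a Cairo snapshot is an explicit clone. The clone is a real copy; in Cairo's write-once memory the old value presumably already sits in the trace, which is what makes a snapshot natural there.

```rust
#[derive(Clone, Debug, PartialEq)]
struct Rectangle {
    width: u64,
    height: u64,
}

fn area(rect: &Rectangle) -> u64 {
    rect.width * rect.height
}

fn main() {
    let mut rec = Rectangle { width: 10, height: 10 };

    // In Rust, `let first = &rec; rec.height = 5;` followed by a later use of
    // `first` fails to compile (E0506: cannot assign because it is borrowed).
    // An explicit clone is the runnable stand-in for a Cairo snapshot.
    let first_snapshot = rec.clone();
    rec.height = 5;
    let second_snapshot = rec.clone();

    // The first snapshot still reflects the value before the mutation.
    assert_eq!(area(&first_snapshot), 100);
    assert_eq!(area(&second_snapshot), 50);
    println!("{:?} -> {:?}", first_snapshot, second_snapshot);
}
```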

It's even more difficult to implement smart contracts in Rust without creating layers of macros and domain-specific languages. This is why a Solana port of Uniswap V2's swap function is over 100 lines long.

Ethereum's Best Smart Contract Language?

The initial version of Starknet's native programming language Cairo (now known as CairoZero) evolved from a low-level circuit writing DSL into a hybrid language that supported some of Python's idioms while still leaking lower-level details.

The result was horrible to work with.

Here is some code from an ERC4626 implementation that illustrates some of the quirks of writing Cairo in the past: needing to provide builtins to functions, needing to explicitly allocate space for local variables, ugly error messages and complex variable rebinding, tuple-based return values, and more.

In short, CairoZero was Starknet's LLL moment, a language you had to tolerate, not enjoy. Something changed in late 2022 and early 2023, however.

Starknet quietly launched Cairo 1, and it immediately became one of the most ergonomic and performant smart contract languages.

jtriley.eth wrote about the imperative to fix Solidity when conceptualizing Edge:

Did you know, in a 2021 report on smart contracts (PDF) by Trail of Bits, it was found that around 90% of all EVM smart contracts are at least 56% similar, with 7% being completely identical? This is not a signal of lack of innovation, rather it is a signal that code reuse demands the most attention.

Clear semantics for namespaces and modules makes code reusable, parametric polymorphism (generics) and subtyping (traits) make reusable code worth writing, and annotations for all EVM data locations minimizes semantics imposed by the compiler.

Minimizing compiler semantic imposition improves granularity of developer control without relying on backdoors such as inline assembly. Inline assembly should always be enabled but outside of the standard library, its use should be considered a failure of the compiler. Practical inline assembly is used for a single reason, the developer has more context than can be provided to the compiler. This may be in the form of more efficient memory usage, arbitrary storage writes, bespoke serialization and deserialization, and unconventional storage methods.

The solution to these problems on Ethereum was already lurking in plain sight back then. StarkWare has solved these engineering challenges and more while making a language that is natively provable.

With the compiler ported from Python to Rust, Cairo development and testing feel like working directly in Rust. And work on a native MLIR compiler (the same technology used to optimize Google's TensorFlow stack) suggests Cairo execution will get faster yet.

The language also has three things that Solidity desperately lacks today: zero-cost abstractions, compositional code reuse, and generics.

Zero-cost abstractions

Cairo inherits the most appealing abstractions from Rust's type system: structs and pattern-matched enums, which are often requested for the EVM.

Abstractions like these not only improve readability but also allow embedding security properties more directly into the smart contract code without requiring an annotation system like Certora and without the associated process overheads.
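As a rough illustration of embedding a security property in the type system, here is a Rust sketch of a hypothetical vault whose lifecycle is a pattern-matched enum. Cairo's enums and `match` work along similar lines, but this is not Cairo code and all names are made up for the example.

```rust
// Hypothetical vault with an explicit lifecycle; names are illustrative only.
#[derive(Debug, Clone, Copy, PartialEq)]
enum VaultState {
    Active { fee_bps: u16 },
    Paused,
    Closed,
}

struct Vault {
    state: VaultState,
    balance: u128,
}

impl Vault {
    /// Amount a depositor may withdraw right now.
    /// The `match` has no wildcard arm, so adding a new state is a compile
    /// error here until it is handled: "no withdrawals while paused" cannot
    /// be silently forgotten.
    fn max_withdrawable(&self) -> u128 {
        match self.state {
            VaultState::Active { fee_bps } => {
                let fee = self.balance * fee_bps as u128 / 10_000;
                self.balance - fee
            }
            VaultState::Paused => 0,
            // Emergency exit: full balance, no fee.
            VaultState::Closed => self.balance,
        }
    }
}

fn main() {
    let mut vault = Vault { state: VaultState::Active { fee_bps: 30 }, balance: 1_000 };
    assert_eq!(vault.max_withdrawable(), 997);
    vault.state = VaultState::Paused;
    assert_eq!(vault.max_withdrawable(), 0);
    vault.state = VaultState::Closed;
    assert_eq!(vault.max_withdrawable(), 1_000);
}
```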

Cairo also has a recoverable error-handling system, which is a marked improvement over Solidity's bifurcated require and error type idioms.
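The following Rust sketch (hypothetical names, not the Cairo Book's example) shows the `Result` and `?` pattern that Cairo borrows from Rust. In Solidity, a failed `require` reverts the current call, and a caller can only catch that across an external call with try/catch; here the caller simply matches on the error and falls back to another route.

```rust
/// Hypothetical error type for a quoting function.
#[derive(Debug, PartialEq)]
enum QuoteError {
    ZeroAmount,
    EmptyPool,
}

/// Constant-product output amount (x * y = k, no fee).
fn quote_out(amount_in: u128, reserve_in: u128, reserve_out: u128) -> Result<u128, QuoteError> {
    if amount_in == 0 {
        return Err(QuoteError::ZeroAmount);
    }
    if reserve_in == 0 || reserve_out == 0 {
        return Err(QuoteError::EmptyPool);
    }
    Ok(amount_in * reserve_out / (reserve_in + amount_in))
}

/// `?` hands the first error back to the caller instead of aborting everything.
fn quote_two_hops(amount_in: u128, first: (u128, u128), second: (u128, u128)) -> Result<u128, QuoteError> {
    let mid = quote_out(amount_in, first.0, first.1)?;
    quote_out(mid, second.0, second.1)
}

fn main() {
    // The caller recovers from a failed route by falling back to a direct quote.
    let best = match quote_two_hops(100, (1_000, 1_000), (0, 500)) {
        Ok(out) => out,
        Err(QuoteError::EmptyPool) => quote_out(100, 1_000, 1_000).unwrap_or(0),
        Err(e) => panic!("unexpected error: {:?}", e),
    };
    assert_eq!(best, 90);
    assert_eq!(quote_two_hops(100, (1_000, 1_000), (1_000, 1_000)), Ok(82));
}
```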

Here's an example from the Cairo Book:

Traits and composition over inheritance

It's commonly understood that Solidity's liberal and obfuscated inheritance patterns can lead to significant security issues.

The recent ThirdWeb exploit was directly attributed to having a long and complicated inheritance tree.

The alternative EVM language, Vyper, simplifies this by preventing inheritance altogether. While it supports simple forms of composition, this design intentionally eliminates much of the opportunity for code reuse and abstraction.

Is there a fundamental tradeoff between extensibility and readability/safety?

Not really. Cairo offers a third path: trait-based inheritance and the ability to build up contracts compositionally from smaller state machines.
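A minimal Rust sketch of composition over inheritance, assuming two hypothetical building blocks (`Ownable` and `Pausable`) that a token contract holds as fields. Cairo expresses this idea with its own component mechanism; the code below only mimics the structure and is not OpenZeppelin's or Starknet's API.

```rust
// Hypothetical components; this mimics the idea only, not any real library API.
struct Ownable {
    owner: u64,
}

impl Ownable {
    fn assert_only_owner(&self, caller: u64) -> Result<(), &'static str> {
        if caller == self.owner { Ok(()) } else { Err("caller is not the owner") }
    }
}

struct Pausable {
    paused: bool,
}

impl Pausable {
    fn assert_not_paused(&self) -> Result<(), &'static str> {
        if self.paused { Err("contract is paused") } else { Ok(()) }
    }
}

// The contract contains its components rather than inheriting from them,
// so every entry point and the checks it runs are visible in one place.
struct Token {
    ownable: Ownable,
    pausable: Pausable,
    total_supply: u128,
}

impl Token {
    fn mint(&mut self, caller: u64, amount: u128) -> Result<(), &'static str> {
        self.ownable.assert_only_owner(caller)?;
        self.pausable.assert_not_paused()?;
        self.total_supply += amount;
        Ok(())
    }

    fn pause(&mut self, caller: u64) -> Result<(), &'static str> {
        self.ownable.assert_only_owner(caller)?;
        self.pausable.paused = true;
        Ok(())
    }
}

fn main() {
    let mut token = Token {
        ownable: Ownable { owner: 1 },
        pausable: Pausable { paused: false },
        total_supply: 0,
    };
    assert_eq!(token.mint(2, 50), Err("caller is not the owner"));
    assert_eq!(token.mint(1, 50), Ok(()));
    assert_eq!(token.pause(1), Ok(()));
    assert_eq!(token.mint(1, 50), Err("contract is paused"));
    assert_eq!(token.total_supply, 50);
}
```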

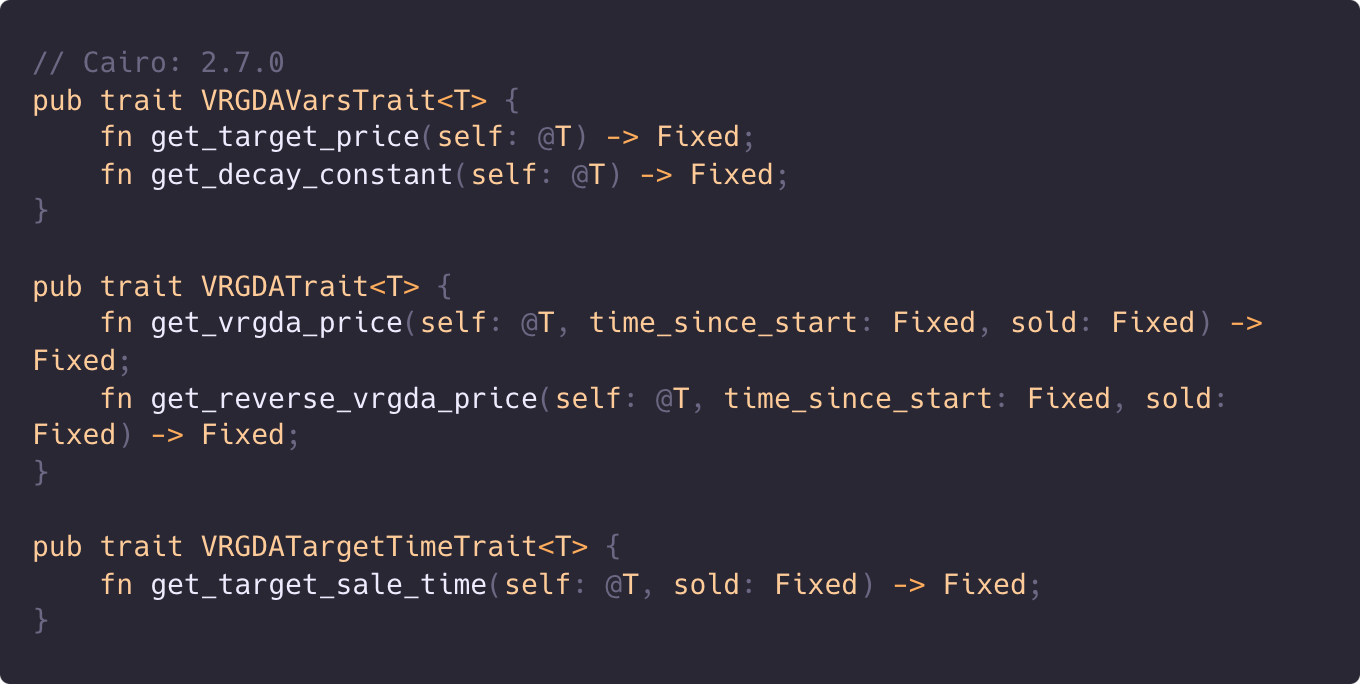

For example, here are some Dutch Auction traits from the Origami library:

These can be used to create modular code that uses Dutch auctions without depending on a specific implementation.
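The shape of such an abstraction can be sketched in Rust. The `DutchAuction` trait and the two pricing curves below are hypothetical and do not reproduce Origami's interface; they only show buyer logic written once against a trait and reused with different implementations.

```rust
// Hypothetical trait; not the Origami library's actual interface.
trait DutchAuction {
    /// Price at `t` seconds after the auction starts.
    fn price_at(&self, t: u64) -> u128;
}

/// Price falls linearly from `start_price` to `end_price` (assumes start >= end).
struct LinearDecay {
    start_price: u128,
    end_price: u128,
    duration: u64,
}

impl DutchAuction for LinearDecay {
    fn price_at(&self, t: u64) -> u128 {
        if t >= self.duration {
            return self.end_price;
        }
        let decrease = (self.start_price - self.end_price) * t as u128 / self.duration as u128;
        self.start_price - decrease
    }
}

/// Price drops by `step` every `interval` seconds, never below `floor` (assumes interval > 0).
struct StepDecay {
    start_price: u128,
    step: u128,
    interval: u64,
    floor: u128,
}

impl DutchAuction for StepDecay {
    fn price_at(&self, t: u64) -> u128 {
        let steps = (t / self.interval) as u128;
        self.start_price.saturating_sub(steps * self.step).max(self.floor)
    }
}

/// Buyer logic is generic: it never depends on a concrete pricing curve.
fn can_buy<A: DutchAuction>(auction: &A, t: u64, bid: u128) -> bool {
    bid >= auction.price_at(t)
}

fn main() {
    let linear = LinearDecay { start_price: 1_000, end_price: 100, duration: 100 };
    let stepped = StepDecay { start_price: 1_000, step: 100, interval: 10, floor: 300 };
    assert_eq!(linear.price_at(50), 550);
    assert_eq!(stepped.price_at(25), 800);
    assert_eq!(stepped.price_at(1_000), 300);
    assert!(can_buy(&linear, 50, 600));
    assert!(!can_buy(&stepped, 25, 600));
}
```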

Modular composition is advocated by experts as the safest design pattern for extensibility.

Code reuse is further facilitated by Scarb, Cairo's package manager and the equivalent of Rust's Cargo. For example, the OpenZeppelin cairo-contracts library declares dependencies like so:

Solidity never really figured out its dependency management story.

The native package management solution didn't gain traction, and developers are still torn between node modules and Git submodules for dependency management, even in the Foundry community, which has been surprisingly consistent in developing conventions.

But modularity and composability do not exist only at the language level. Starknet separates contract classes (code) from contract instances (deployed state), so a class acts much like a pre-deployed contract template. This is a more efficient version of OpenZeppelin's contract clones: a class is declared only once, any number of contracts can be deployed from it, and upgradability is supported natively.
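A toy Rust model of the class/instance split, under loudly stated simplifications: Starknet computes class hashes with Poseidon over the class definition and derives contract addresses from deployment parameters, whereas this sketch uses std's `DefaultHasher` and sequential addresses. The hypothetical `replace_class` method mirrors the idea behind Starknet's `replace_class` syscall, which lets an instance switch to a new class while keeping its storage.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};

// Toy stand-in for a class hash; NOT how Starknet actually hashes classes.
type ClassHash = u64;

fn class_hash(code: &str) -> ClassHash {
    let mut h = DefaultHasher::new();
    code.hash(&mut h);
    h.finish()
}

#[derive(Default)]
struct Chain {
    classes: HashMap<ClassHash, String>,        // code, stored once per class
    instances: HashMap<u64, (ClassHash, u128)>, // address -> (class, one storage slot)
    next_address: u64,                          // simplification: sequential addresses
}

impl Chain {
    /// Declare code once; re-declaring identical code is a no-op.
    fn declare(&mut self, code: &str) -> ClassHash {
        let hash = class_hash(code);
        self.classes.entry(hash).or_insert_with(|| code.to_string());
        hash
    }

    /// Deploy an instance that points at an existing class; no code is copied.
    fn deploy(&mut self, class: ClassHash) -> Option<u64> {
        if !self.classes.contains_key(&class) {
            return None;
        }
        self.next_address += 1;
        self.instances.insert(self.next_address, (class, 0));
        Some(self.next_address)
    }

    /// Upgrade in place: swap the class an instance points to, keeping its storage.
    fn replace_class(&mut self, address: u64, new_class: ClassHash) -> bool {
        match (self.classes.contains_key(&new_class), self.instances.get_mut(&address)) {
            (true, Some(instance)) => {
                instance.0 = new_class;
                true
            }
            _ => false,
        }
    }
}

fn main() {
    let mut chain = Chain::default();
    let v1 = chain.declare("erc20 v1");
    let a = chain.deploy(v1).unwrap();
    let b = chain.deploy(v1).unwrap();
    assert_eq!(chain.classes.len(), 1); // two instances share one copy of the code

    chain.instances.get_mut(&a).unwrap().1 = 42;
    let v2 = chain.declare("erc20 v2");
    assert!(chain.replace_class(a, v2));
    assert_eq!(chain.instances[&a], (v2, 42)); // new code, same storage
    assert_eq!(chain.instances[&b].0, v1);
}
```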

Generics

In 2024, Solidity still doesn't support generic types, which means, for example, that OpenZeppelin must maintain a separate variant of each enumerable data structure for every element type (EnumerableSet alone ships AddressSet, UintSet and Bytes32Set).
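For contrast, here is a single generic enumerable set in Rust, a hypothetical sketch rather than a port of OpenZeppelin's code. It uses the same swap-and-pop removal trick as OpenZeppelin's Solidity EnumerableSet, but one implementation covers any hashable element type.

```rust
use std::collections::HashMap;
use std::hash::Hash;

/// One generic set instead of AddressSet / UintSet / Bytes32Set.
struct EnumerableSet<T> {
    values: Vec<T>,
    positions: HashMap<T, usize>, // index of each value inside `values`
}

impl<T: Eq + Hash + Clone> EnumerableSet<T> {
    fn new() -> Self {
        EnumerableSet { values: Vec::new(), positions: HashMap::new() }
    }

    /// Returns false if the value was already present.
    fn add(&mut self, value: T) -> bool {
        if self.positions.contains_key(&value) {
            return false;
        }
        self.positions.insert(value.clone(), self.values.len());
        self.values.push(value);
        true
    }

    /// O(1) removal: move the last element into the freed slot, then pop.
    fn remove(&mut self, value: &T) -> bool {
        let Some(pos) = self.positions.remove(value) else {
            return false;
        };
        let last = self.values.len() - 1;
        self.values.swap(pos, last);
        self.values.pop();
        if pos < self.values.len() {
            let moved = self.values[pos].clone();
            self.positions.insert(moved, pos);
        }
        true
    }

    fn contains(&self, value: &T) -> bool {
        self.positions.contains_key(value)
    }

    fn len(&self) -> usize {
        self.values.len()
    }

    fn at(&self, index: usize) -> Option<&T> {
        self.values.get(index)
    }
}

fn main() {
    let mut ids: EnumerableSet<u128> = EnumerableSet::new();
    for id in [1, 2, 3] {
        ids.add(id);
    }
    assert!(!ids.add(2)); // duplicates are rejected
    assert!(ids.remove(&1));
    assert_eq!(ids.len(), 2);
    assert_eq!(ids.at(0), Some(&3)); // the last element was swapped into slot 0

    // The same code works for 20-byte addresses.
    let mut holders: EnumerableSet<[u8; 20]> = EnumerableSet::new();
    holders.add([0xAA; 20]);
    assert!(holders.contains(&[0xAA; 20]));
}
```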

Meanwhile, Cairo developers are already building libraries for tensor math:

Generics not only extend to data structures, but they also facilitate reusable security patterns and composable protocol architectures.

Caveats

Of course, Cairo is still a nascent language, and while it features a radically improved foundation for smart contract development, developer experience also comes down to the tooling.

Some Rust features are still missing from the language. For example, Aztec's Noir (a privacy-focused smart contract language) already implements function types:
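For readers unfamiliar with the feature, here is what function types look like in Rust, where `fn(u128) -> u128` is an ordinary type that can be stored in arrays and struct fields and passed around. The helper functions are hypothetical, and Noir's own syntax is not shown.

```rust
fn double(x: u128) -> u128 {
    x * 2
}

fn square(x: u128) -> u128 {
    x * x
}

/// Takes a slice of function pointers and applies each one to `x`.
fn apply_all(fs: &[fn(u128) -> u128], x: u128) -> Vec<u128> {
    fs.iter().map(|f| f(x)).collect()
}

fn main() {
    // The annotation turns each distinct function item into a `fn` pointer.
    let pipeline: [fn(u128) -> u128; 2] = [double, square];
    assert_eq!(apply_all(&pipeline, 3), vec![6, 9]);

    // A function-typed struct field: behaviour chosen at runtime.
    struct Rule {
        name: &'static str,
        apply: fn(u128) -> u128,
    }
    let rule = Rule { name: "square", apply: square };
    assert_eq!((rule.apply)(4), 16);
    println!("{} applied", rule.name);
}
```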

The language desperately needs more security tooling, good debuggers, audited libraries for things like governance contracts, and many more components we have come to expect from the more mature EVM ecosystem.

Part III: Starknet's App Layer Reflects Its Innovative Architecture

Why Starknet is the best place to innovate

StarkWare's Exploration team is called Keep Starknet Strange. This is no accident: Starknet is fast becoming the best place to explore highly innovative protocol ideas, and for important structural reasons.

A rollup architecture that favors innovation

The Starknet rollup architecture makes compute and calldata very cheap, which is a completely different paradigm compared to L1 (“everything is expensive”) or even optimistic rollups (“calldata is very expensive and capacity is limited to manage state bloat, which sometimes makes calldata even more expensive”). The cost structure of operating on Starknet is most similar to regular computing.

How so? Isn't state cheap in regular computing but expensive on Starknet due to state-diffs being posted to L1? Well, state changes are not cheap in regular computing. To have stateful changes in your web application, you have to think about the following considerations (among many others):

Database selection, installation, and maintenance;

Data migrations. Just read Migrations and Future Proofing if you think schema changes are easy in web2. The complexity of doing this is what created the opportunity window for a language like Dark to be contemplated;

Orchestration and microservices;

Conflict resolution (among users or the application itself);

History and audit trails (e.g., event sourcing).

Storage costs in web2 are internalized to the company, and therefore, it's important to account for both external cloud storage costs (which are smaller) and internal development costs (which are much larger).

Limited moats/flywheels of established competitors

The competitive landscape on L2s is dominated by a number of “multi-chain” protocols, which enjoy very high adoption on every chain that they deploy on. This means that if you deploy a DEX on an L2, you often have to think about competing with Uniswap, Balancer, and Curve routing-wise. For better or worse, the playing field on Starknet is more level because existing protocols cannot simply deploy code they have already written for L1. This makes it a better environment to pursue longer-term, more innovative protocol ideas without having to launch aggressively with a token.

Don't forget Cairo

Cairo already has a dedicated section in this article, so we won't repeat it here, but the language excels at combining gas efficiency (through Sierra and its provability) with high-level abstractions. These aspects make it a great language for more right-curve protocol designs.

Decoupling from the slow Ethereum EIP cycle

Another consequence of using Cairo is that the Starknet community is not at the mercy of the EIP consensus cycle. For example, Account Abstraction became widespread on Starknet years before it saw adoption on Ethereum mainnet. We saw many early experiments with account abstraction, including arcade wallets using keychain access on Apple hardware, fund management applications built on it, and fairly rapid innovation in the wallet stack.

Examples

These benefits are not just theoretical; here are three examples of protocol designs that become viable when building on Starknet:

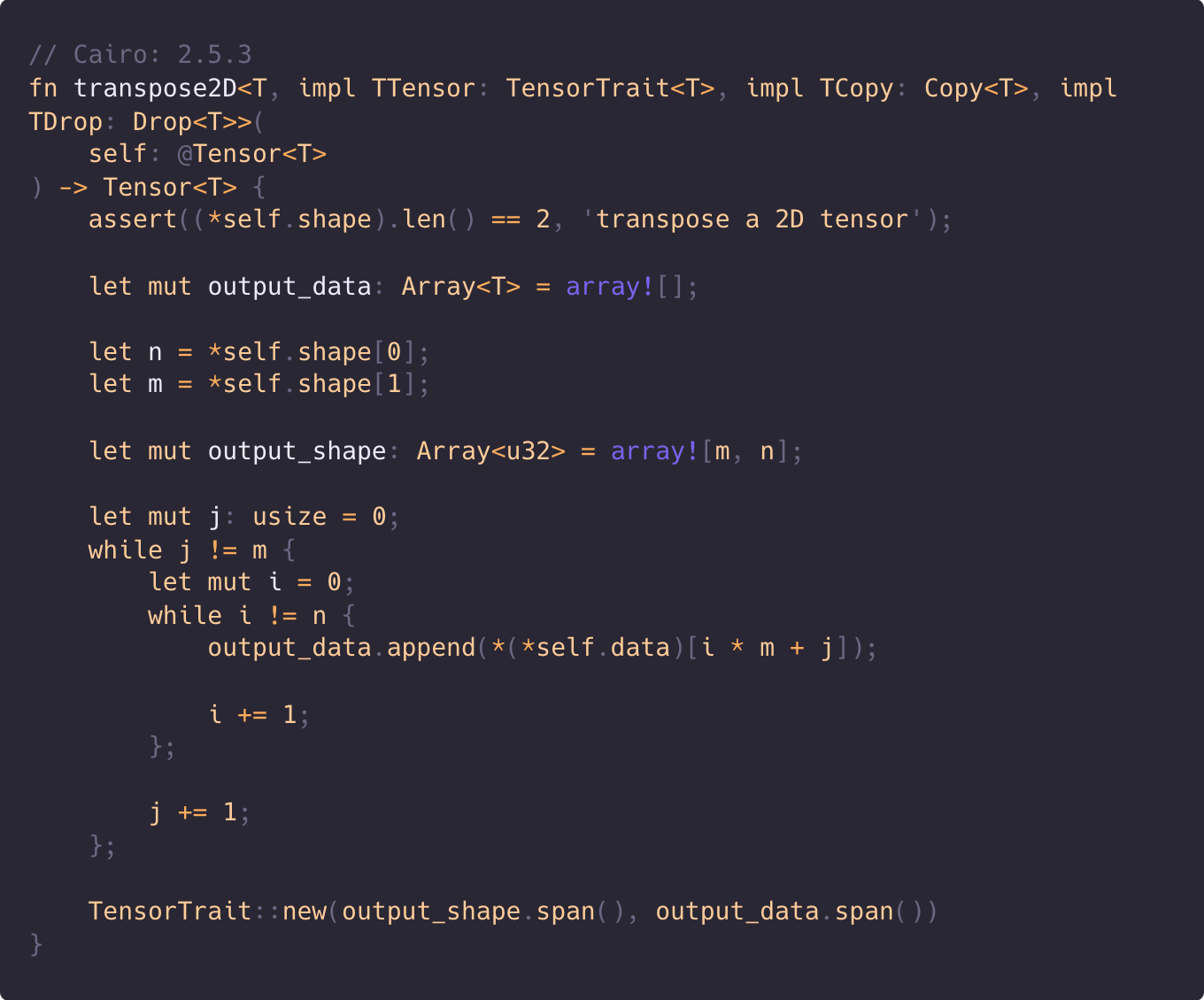

Orion is an open-source framework for zkML built by Giza. It provides TensorFlow-style linear algebra abstractions that work over generic tensors. Here is an example of transposition:
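Orion's actual API is not reproduced here. As a hedged sketch of what generic transposition involves, the Rust code below stores a tensor as a flat row-major buffer plus a shape, and permutes axes by mapping each output index back to an input offset through strides. The `Tensor` type and its methods are hypothetical.

```rust
/// Hypothetical tensor: row-major data plus a shape.
#[derive(Debug, Clone, PartialEq)]
struct Tensor<T> {
    shape: Vec<usize>,
    data: Vec<T>,
}

impl<T: Copy> Tensor<T> {
    fn new(shape: Vec<usize>, data: Vec<T>) -> Self {
        assert_eq!(shape.iter().product::<usize>(), data.len(), "shape/data mismatch");
        Tensor { shape, data }
    }

    /// Row-major strides: the last axis is contiguous.
    fn strides(shape: &[usize]) -> Vec<usize> {
        let mut strides = vec![1; shape.len()];
        for i in (0..shape.len().saturating_sub(1)).rev() {
            strides[i] = strides[i + 1] * shape[i + 1];
        }
        strides
    }

    /// Output axis `i` is input axis `axes[i]` (the same convention as NumPy).
    fn transpose(&self, axes: &[usize]) -> Tensor<T> {
        assert_eq!(axes.len(), self.shape.len(), "one entry per axis");
        let mut seen = vec![false; axes.len()];
        for &a in axes {
            assert!(a < axes.len() && !seen[a], "axes must be a permutation");
            seen[a] = true;
        }
        let in_strides = Self::strides(&self.shape);
        let out_shape: Vec<usize> = axes.iter().map(|&a| self.shape[a]).collect();
        let out_strides = Self::strides(&out_shape);

        let mut out = Vec::with_capacity(self.data.len());
        for flat in 0..self.data.len() {
            // Decompose the output flat index into coordinates, then map each
            // coordinate to the input axis it came from.
            let mut offset = 0;
            for (i, &a) in axes.iter().enumerate() {
                let coord = (flat / out_strides[i]) % out_shape[i];
                offset += coord * in_strides[a];
            }
            out.push(self.data[offset]);
        }
        Tensor::new(out_shape, out)
    }
}

fn main() {
    // [[1, 2, 3],
    //  [4, 5, 6]]
    let m = Tensor::new(vec![2, 3], vec![1, 2, 3, 4, 5, 6]);
    let t = m.transpose(&[1, 0]);
    assert_eq!(t.shape, vec![3, 2]);
    assert_eq!(t.data, vec![1, 4, 2, 5, 3, 6]);
}
```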

Kakarot is an entire ZK-EVM written in Cairo. Each instruction is implemented in Cairo code written in a style not unlike EVM implementations built for traditional hardware:
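To show what implementing an EVM instruction by instruction means, here is a toy Rust interpreter for four real opcodes (STOP 0x00, ADD 0x01, MUL 0x02, SUB 0x03) plus PUSH1 (0x60). It is not Kakarot's code: it uses 128-bit words instead of the EVM's 256-bit words and omits gas accounting and the 1024-item stack limit.

```rust
/// Hypothetical error type for this toy interpreter.
#[derive(Debug, PartialEq)]
enum EvmError {
    StackUnderflow,
    InvalidOpcode(u8),
}

// Simplification: real EVM words are 256-bit; u128 keeps the sketch dependency-free.
type Word = u128;

fn pop(stack: &mut Vec<Word>) -> Result<Word, EvmError> {
    stack.pop().ok_or(EvmError::StackUnderflow)
}

/// Executes bytecode and returns the final stack (bottom first).
fn run(code: &[u8]) -> Result<Vec<Word>, EvmError> {
    let mut stack: Vec<Word> = Vec::new();
    let mut pc = 0;
    while pc < code.len() {
        match code[pc] {
            // STOP
            0x00 => break,
            // ADD: top + second, wrapping at the word size
            0x01 => {
                let a = pop(&mut stack)?;
                let b = pop(&mut stack)?;
                stack.push(a.wrapping_add(b));
            }
            // MUL: top * second, wrapping
            0x02 => {
                let a = pop(&mut stack)?;
                let b = pop(&mut stack)?;
                stack.push(a.wrapping_mul(b));
            }
            // SUB: top - second, wrapping
            0x03 => {
                let a = pop(&mut stack)?;
                let b = pop(&mut stack)?;
                stack.push(a.wrapping_sub(b));
            }
            // PUSH1: push the next byte; bytes past the end of the code read as zero
            0x60 => {
                let byte = code.get(pc + 1).copied().unwrap_or(0);
                stack.push(Word::from(byte));
                pc += 1;
            }
            other => return Err(EvmError::InvalidOpcode(other)),
        }
        pc += 1;
    }
    Ok(stack)
}

fn main() {
    // PUSH1 2, PUSH1 3, ADD, PUSH1 4, MUL, STOP  =>  (2 + 3) * 4
    let code = [0x60, 0x02, 0x60, 0x03, 0x01, 0x60, 0x04, 0x02, 0x00];
    assert_eq!(run(&code), Ok(vec![20]));
}
```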

Ekubo is a concentrated liquidity AMM with a singleton architecture and extension support (read: Uniswap v4 style AMM). Its author, Moody Salem, was a co-inventor of v4, and it's telling that he chose Starknet/Cairo to develop the next-generation AMM.

Building extensions on a validity rollup means that far more complex calculations become viable (such as dynamic pricing, LVR reduction, etc.), while coprocessors can be used to compute over historical data. It's likely that not only will Ekubo itself prove groundbreaking, but so will some Cairo extension written for it.
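As a rough sketch of the extension idea, here is a hypothetical before-swap hook in Rust that charges a dynamic fee based on the latest price move. The trait, types and fee formula are illustrative assumptions, not Ekubo's extension API, and the formula is a crude volatility proxy rather than an LVR-reduction scheme.

```rust
/// Hypothetical snapshot of pool state passed to an extension.
struct PoolState {
    base_fee_bps: u32,
    last_price: u128,
    price: u128,
}

/// Hypothetical hook interface; not Ekubo's actual extension API.
trait Extension {
    /// Called by the pool before a swap; returns the fee in basis points.
    fn before_swap(&self, pool: &PoolState, amount_in: u128) -> u32;
}

/// Default behaviour: charge the pool's base fee.
struct NoExtension;

impl Extension for NoExtension {
    fn before_swap(&self, pool: &PoolState, _amount_in: u128) -> u32 {
        pool.base_fee_bps
    }
}

/// Dynamic pricing: add the latest price move (in bps) to the fee, capped.
struct VolatilityFee {
    max_fee_bps: u32,
}

impl Extension for VolatilityFee {
    fn before_swap(&self, pool: &PoolState, _amount_in: u128) -> u32 {
        let moved = pool.price.abs_diff(pool.last_price);
        let move_bps = moved * 10_000 / pool.last_price.max(1);
        let fee = (pool.base_fee_bps as u128 + move_bps).min(self.max_fee_bps as u128);
        fee as u32
    }
}

/// The core pool logic is identical whichever extension is attached.
fn swap_fee<E: Extension>(ext: &E, pool: &PoolState, amount_in: u128) -> u128 {
    let fee_bps = ext.before_swap(pool, amount_in) as u128;
    amount_in * fee_bps / 10_000
}

fn main() {
    let calm = PoolState { base_fee_bps: 30, last_price: 1_000, price: 1_010 };
    let volatile = PoolState { base_fee_bps: 30, last_price: 1_000, price: 1_200 };
    let ext = VolatilityFee { max_fee_bps: 500 };
    assert_eq!(swap_fee(&NoExtension, &calm, 10_000), 30);
    assert_eq!(swap_fee(&ext, &calm, 10_000), 130);
    assert_eq!(swap_fee(&ext, &volatile, 10_000), 500);
}
```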

But arguably the most far-out, forward-looking category by far is Autonomous Worlds, which we turn to next.

Autonomous Worlds

ludens has thoughtfully defined Autonomous Worlds as worlds that exist on a blockchain substrate and therefore exist independently of a centralized maintainer:

Autonomous Worlds have hard diegetic boundaries, formalised introduction rules, and no need for privileged individuals to keep the World alive.

This property of autonomy is in strict contrast with the asset-driven “NFT enhanced web2 games” of yesteryear and the “metaverse,” which emphasizes sensory interfaces over substance.

An autonomous world can be as large as an infinite procedurally generated multi-dimensional interplanetary system or as small as a set of bits.

The benefits of using blockchains aren't just philosophical; here are two ways autonomous worlds are qualitatively different:

They blur the game creator and the game consumer (a sort of natural generalization of the Minecraft and Roblox effect);

They make games composable within themselves, with other games, and with other blockspace-adjacent infrastructure such as financial primitives.

But the challenges they have to overcome are equally significant: the difficulty of creating a fun gameplay experience without turning the game into a speculative casino (which many assetized products naturally evolve into), and the computational limitations of blockchains applied to one of the most compute-hungry use cases in existence.

Some of the early autonomous world builders recognized the need for scalability and chose Starknet early on as their L2 scaling solution. They've not only built Starknet into a hub for gaming but are also now shaping the network and its community in ways that reinforce its leadership in the autonomous worlds category.

Perhaps the best illustration of an autonomous world is Realms. Built by an enthusiastic community as an extension of the Loot primitive, Realms has evolved into a foundational world itself with the following projects: