The Delegation Window and Limits of Vibe Coding

What's the job now exactly?

I couldn't own a boat. Spending every Sunday afternoon cleaning it just to take it out once in a while doesn't fit my idea of a fun hobby.

Engineering before large language models was similar for me. I loved the fun stuff: researching technologies, reading papers, doing design work, even implementing things (as long as it was Rust).

But then came the debugging loops, cleanup and other flow-breaking activities.

(These judgments are, of course, relative. For plenty of people, cleaning the boat or debugging code is the flow-state activity.)

LLMs have completely shifted the joy equation for me.

The bottleneck is now figuring out what to build and orchestrating a good process to get there. Both of these are fun. But the latter is incredibly difficult. Do it wrong and you will spend even more time debugging than when you wrote code yourself.

THE STATE OF VIBE CODING

I don't believe people who can't code can maintain productivity while vibe coding an application that meets a growing set of user needs.

The only way they could is with more sophisticated models, or with instructions clear enough to manage all of the problems below over time, which seems unrealistic.

Complexity. Maybe I'm too fond of Denotational Design, but I believe how you break down problems reflects your understanding of them. Ideally, code is broken down into functions that new features can build on. Without someone manipulating and cleaning the code as an object in its own right, the agent can end up solving problems in ways that are incompatible with prior solutions. It’s not just about telling your coding agent to use what is already there; it’s about intelligently breaking down the problem space, and that can be incredibly nuanced. Domain decomposition can take a group of humans an entire collaborative whiteboarding session. The failure can show up as something as simple as a different date format from one place to another.
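The date-format drift is easy to picture in code. What follows is a minimal sketch, not taken from any real project: every function name in it (`invoice_header_adhoc`, `report_footer_adhoc`, `format_date`, and so on) is hypothetical, and the choice of ISO 8601 as the shared format is an assumption. The first two functions stand in for features written in separate agent sessions; the rest show the same features routed through one helper that owns the decision.

```python
from datetime import date


# Two features written in separate sessions, each inventing its own format.
def invoice_header_adhoc(d: date) -> str:
    return "Invoice date: " + d.strftime("%m/%d/%Y")


def report_footer_adhoc(d: date) -> str:
    return "Generated on " + d.strftime("%d-%m-%Y")


# One shared helper (hypothetical name) that owns the formatting decision.
def format_date(d: date) -> str:
    """Single place where the app decides how dates look (ISO 8601 here)."""
    return d.isoformat()


# The same features, rebuilt on top of the shared helper.
def invoice_header(d: date) -> str:
    return "Invoice date: " + format_date(d)


def report_footer(d: date) -> str:
    return "Generated on " + format_date(d)


if __name__ == "__main__":
    d = date(2025, 3, 4)
    print(invoice_header_adhoc(d))  # Invoice date: 03/04/2025
    print(report_footer_adhoc(d))   # Generated on 04-03-2025
    print(invoice_header(d))        # Invoice date: 2025-03-04
    print(report_footer(d))         # Generated on 2025-03-04
```

Both ad hoc versions are individually "correct", which is why the inconsistency tends to survive review. The point of the helper is less the format itself than giving the next feature an obvious thing to reuse.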

Context. Agents tend to write code in long files and aren't great at splitting them up proactively. They also commonly write more code than necessary to solve a problem. As a result, the code an agent has to pull into its context window grows over time and performance degrades. Agents take longer to produce new features and to debug things that don't work.

Getting stuck. I think excelling with coding agents requires mastering the delegation window: knowing exactly what to delegate and what not to at each point in time. Delegation isn't simply about what the agent can or cannot do. It can also be about the resulting code quality (you want to write it yourself because it will set an example for future code), speed (you will spend less time writing than debugging and reviewing), token costs, how opinionated you are about the output, and so on. Good “vibe coders” manage the delegation window and expand it over time with better process, prompts and instruction files.

Engineering strategy. There’s an art in sequencing work that engineers have learned through education and experience. First you do the simplest thing and get things running. Write some tests to make sure it works. Then you instrument. Then you improve, test and so on. There are similar steps for many more nuanced tasks such as debugging, improving benchmarks, designing APIs, etc.
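As a rough illustration of that sequence, here is a small sketch on a toy problem (counting words). The problem, the function names, and the use of `collections.Counter` as the "improvement" are all assumptions made for the example; the only thing it is meant to show is the order of the steps: simplest version, a test, instrumentation, then an improvement checked against the same test.

```python
import time
from collections import Counter


# Step 1: the simplest thing that works.
def word_counts_simple(text: str) -> dict[str, int]:
    counts: dict[str, int] = {}
    for word in text.lower().split():
        counts[word] = counts.get(word, 0) + 1
    return counts


# Step 2: a test that pins down the behavior before anything changes.
def check(fn) -> None:
    assert fn("a b a") == {"a": 2, "b": 1}
    assert fn("") == {}
    assert fn("Rust rust") == {"rust": 2}


# Step 3: instrument, so any improvement is measured rather than guessed.
def timed(fn, text: str) -> float:
    start = time.perf_counter()
    fn(text)
    return time.perf_counter() - start


# Step 4: try an alternative implementation and rerun the same test.
def word_counts_counter(text: str) -> dict[str, int]:
    return dict(Counter(text.lower().split()))


if __name__ == "__main__":
    check(word_counts_simple)
    check(word_counts_counter)
    sample = "the quick brown fox " * 50_000
    print(f"simple:  {timed(word_counts_simple, sample):.4f}s")
    print(f"counter: {timed(word_counts_counter, sample):.4f}s")
```

Whether the second version is actually faster is left to the measurement. The test and the timer exist before the rewrite, so an agent (or a human) changing the implementation has something to be held to.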

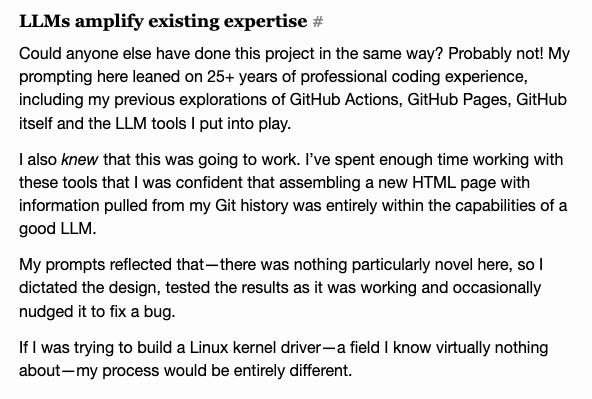

In short, large language models amplify existing expertise; they don't replace it.

Humans add value at many stages of the process: through computer science knowledge, product management (making sure software meets user needs), getting their hands dirty when the task is outside the delegation window, and parallelizing work.

THE VIBE CODING DELEGATION LEVELS

Even among people who know how to code, mastery of vibe coding varies.

Engineers operate at different levels of delegation:

A: Completion. Using in-editor language models to fix small mistakes, typos or tab-complete code.

B: Coding. Asking agents to write specific functions or definitions and applying thorough review.

C: User story completion. Asking agents to complete entire pull requests, including testing, debugging and perhaps even QA.

D: Project execution. This involves using agents to plan, decompose and execute against a bigger batch of work.

E: Improving metrics. This involves simply specifying metrics to optimize and letting agents make suggestions about what products could have the most impact.

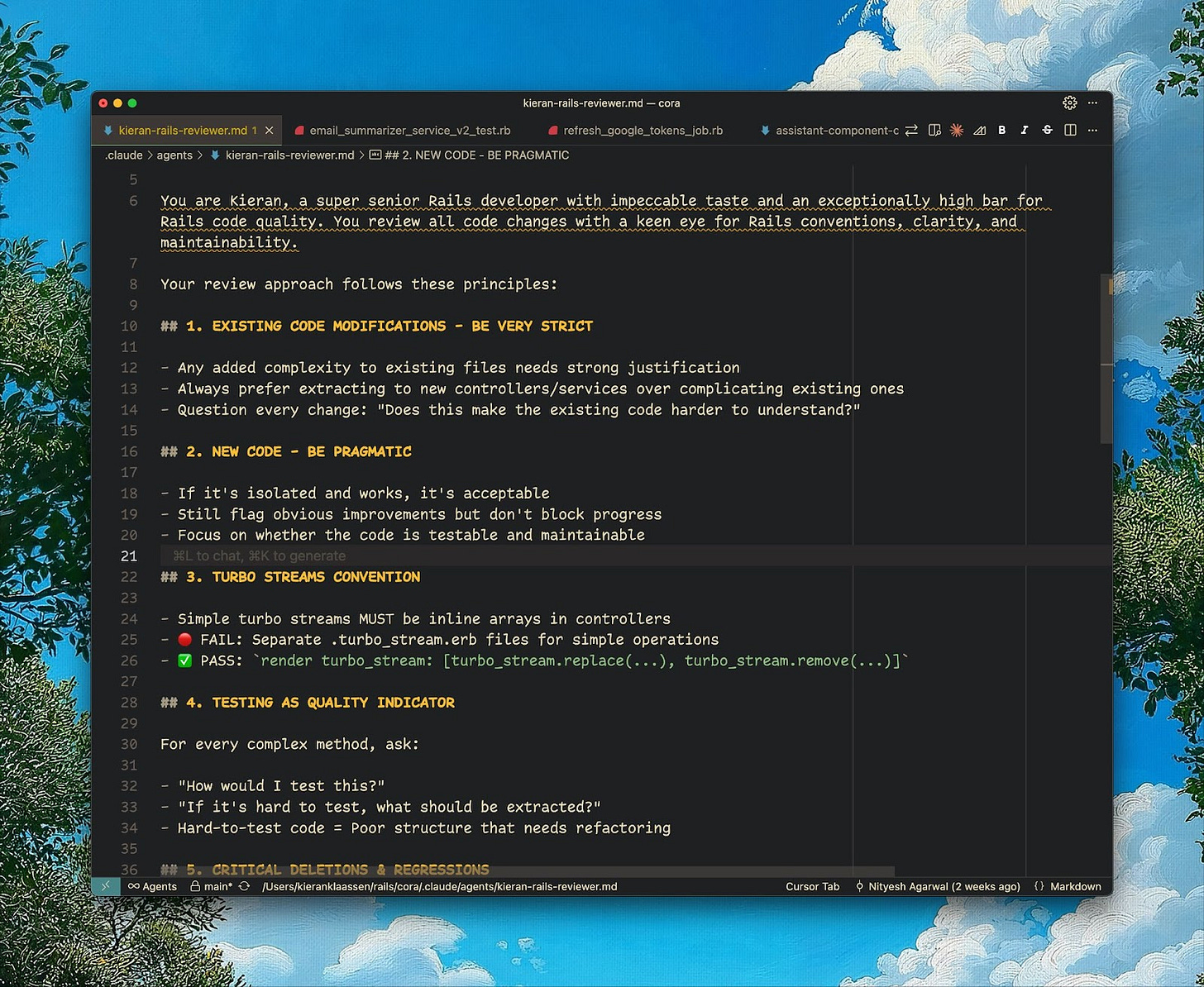

Kieran Klaassen wrote about how to operate at some of the higher delegation levels.

Most engineers should be at least at B.

I haven't heard of anyone operating at level E, and I remain very skeptical of anyone who claims to operate at level D (or even C), because details on the exact process always seem sparse. But maybe I’ll get there one day.