The Sequencer Revenue Party is Over

The post-EIP-4844 revenue strategy landscape for rollups.

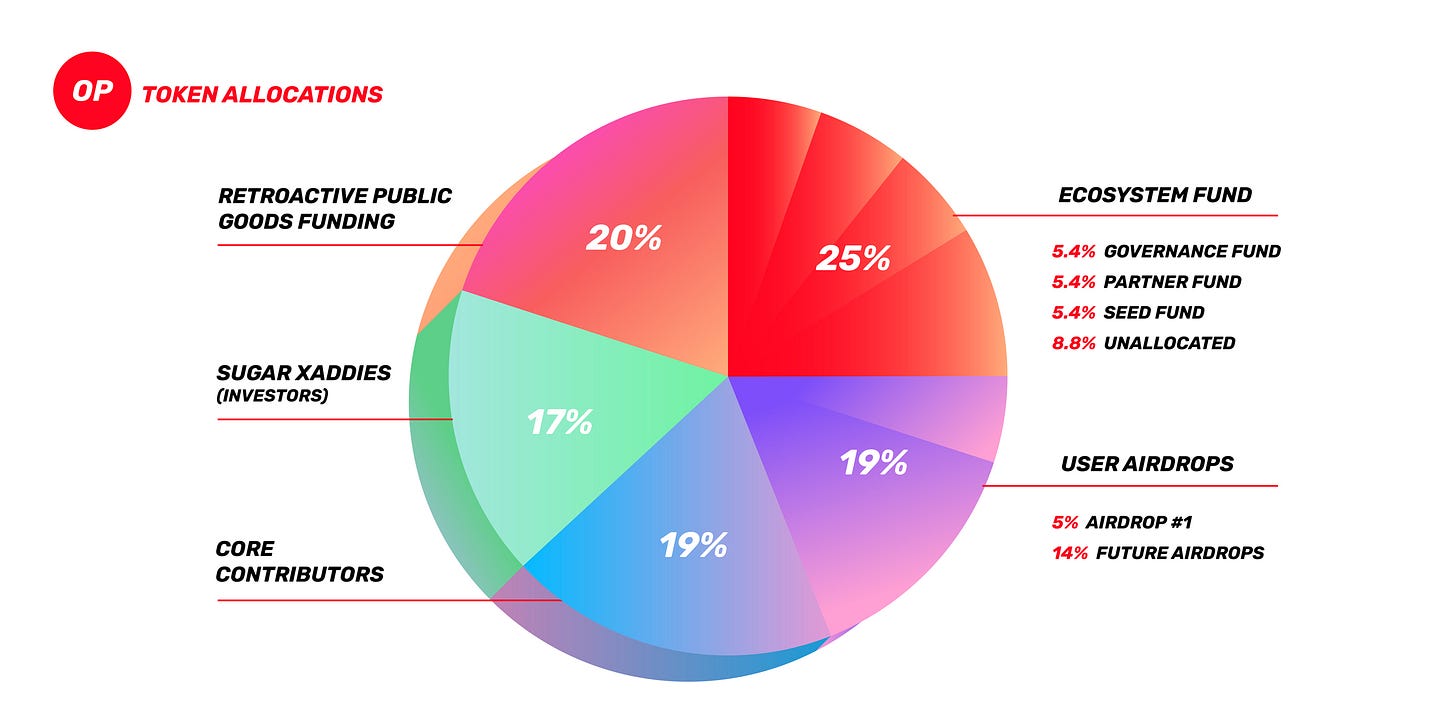

Now that RPGF3 is over and distributed more OP tokens than expected…

Builders have caught on to the idea that these rounds may actually provide important sources of revenue even for small teams. Carl Cervone wrote a helpful thread on this.

Kiwi News, one of my favorite “home pages of crypto”, got a significant allocation, which you can read more about in Bootstrapping Kiwi. It illustrates just how impactful RPGF has become.

However, some have questioned the sustainability of RPGF. They either worry about sustaining it entirely through OP Collective sequencer revenues or even argue for burning unallocated tokens.

Perhaps an even larger problem than a temporary mismatch between current sequencer revenues and the RPGF grantflow is the coming 100x reduction in rollup costs via EIP-4844.

The key idea of EIP-4844 is to introduce an entirely new kind of data storage with its own fee market, which decouples its price from the cost of more permanent data availability solutions.

To understand it we first have to recap how rollups work.

How rollups “store” data today

The first reason why rollup transactions are cheaper than L1 transactions is that they are batched together and compressed.

This has contributed to an intuitive understanding of rollups, but a more important source of efficiency is the fact that rollup transactions don't actually get executed onchain.

Instead, rollups simply store their transaction data and state roots onchain.

ZK rollups get around the costs of execution by submitting proofs of execution instead.

Optimistic rollups get around the costs of execution by being optimistic. They assume submitted state roots are correct and only execute parts of transactions onchain when someone needs to prove that an incorrect state root was submitted.

Rollups are said to use “Ethereum calldata for data availability”.

What this means is that rollup contracts get called by their sequencers with batches of transactions. Since every full node has to remember each transaction and its calldata to reconstruct the state of the chain, this data is considered to be “available”.
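To make the cost side concrete, here is a minimal sketch of how batching and compression reduce what a sequencer pays for calldata. The gas constants are Ethereum's intrinsic gas rules (21,000 gas per transaction, and 4 gas per zero byte or 16 gas per non-zero byte of calldata under EIP-2028). Everything else is an assumption made for illustration: the 72-byte toy transfer format, the pool of 16 accounts, the helper names, and the choice of zlib as the compressor. It also ignores any execution gas a rollup's inbox contract might consume.

```python
import hashlib
import zlib

TX_BASE_GAS = 21_000        # intrinsic gas paid by every L1 transaction
GAS_PER_ZERO_BYTE = 4       # calldata pricing per EIP-2028
GAS_PER_NONZERO_BYTE = 16


def calldata_gas(data: bytes) -> int:
    """Intrinsic gas charged for a calldata payload."""
    zero_bytes = data.count(0)
    return zero_bytes * GAS_PER_ZERO_BYTE + (len(data) - zero_bytes) * GAS_PER_NONZERO_BYTE


def toy_address(i: int) -> bytes:
    """Hypothetical 20-byte address derived from an index."""
    return hashlib.sha256(i.to_bytes(4, "big")).digest()[:20]


def toy_transfer(i: int) -> bytes:
    """Hypothetical 72-byte transfer: sender, recipient, 32-byte amount."""
    sender = toy_address(i % 16)
    recipient = toy_address((i * 7 + 3) % 16)
    amount = (1_000 + i).to_bytes(32, "big")
    return sender + recipient + amount


def compress_batch(txs: list[bytes]) -> bytes:
    """Concatenate and compress a batch (zlib is an assumption of this sketch)."""
    return zlib.compress(b"".join(txs), level=9)


def batch_gas(txs: list[bytes]) -> int:
    """Gas for posting the whole batch as calldata in one L1 transaction."""
    return TX_BASE_GAS + calldata_gas(compress_batch(txs))


txs = [toy_transfer(i) for i in range(100)]
separate = len(txs) * TX_BASE_GAS   # lower bound: 100 plain L1 transfers
batched = batch_gas(txs)

print(f"100 separate L1 transfers: {separate:,} gas")
print(f"one compressed batch:      {batched:,} gas")
```

The base cost of 21,000 gas is paid once per batch instead of once per transfer, and repeated addresses and zero-padded amounts compress well, so the per-transfer share of L1 gas drops sharply. What remains is almost entirely the calldata itself.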

Is there currently anything cheaper that could be used onchain?

Not really. For example, you could consider storing this information in events, but to make the data available in an event it has to go through calldata anyway.

Calldata is basically the tunnel through which any data enters the Ethereum Virtual Machine.

This even includes external data like that used to update prices in Chainlink oracles.

The idea of EIP-4844 is simple: introduce a new way of sending data alongside transactions that is only persisted for a few weeks (roughly 18 days) rather than forever.

This so-called “blob” data won't even be readable from the EVM (contracts only see a commitment to it), and that's precisely what makes it cheaper for the sequencer to submit.
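The separate market for blob data can be sketched in a few lines. The constants and the `fake_exponential` helper below follow the EIP-4844 specification as originally activated (later upgrades may change the values). The 30 gwei execution base fee, the function names `blob_cost_wei` and `calldata_cost_wei`, and the 50-block scenario are assumptions chosen only for illustration.

```python
# Constants as given in the EIP-4844 specification at launch.
GAS_PER_BLOB = 2**17                          # blob gas consumed per blob
BYTES_PER_BLOB = 4096 * 32                    # 4096 field elements of 32 bytes
TARGET_BLOB_GAS_PER_BLOCK = 3 * GAS_PER_BLOB  # target of 3 blobs per block
MAX_BLOB_GAS_PER_BLOCK = 6 * GAS_PER_BLOB     # limit of 6 blobs per block
MIN_BASE_FEE_PER_BLOB_GAS = 1                 # wei
BLOB_BASE_FEE_UPDATE_FRACTION = 3_338_477


def fake_exponential(factor: int, numerator: int, denominator: int) -> int:
    """Integer approximation of factor * e**(numerator / denominator)."""
    i = 1
    output = 0
    numerator_accum = factor * denominator
    while numerator_accum > 0:
        output += numerator_accum
        numerator_accum = (numerator_accum * numerator) // (denominator * i)
        i += 1
    return output // denominator


def blob_base_fee(excess_blob_gas: int) -> int:
    """Price of one unit of blob gas, in wei."""
    return fake_exponential(
        MIN_BASE_FEE_PER_BLOB_GAS, excess_blob_gas, BLOB_BASE_FEE_UPDATE_FRACTION
    )


def next_excess_blob_gas(parent_excess: int, parent_blob_gas_used: int) -> int:
    """Excess accumulates when blocks use more than the target, and drains otherwise."""
    if parent_excess + parent_blob_gas_used < TARGET_BLOB_GAS_PER_BLOCK:
        return 0
    return parent_excess + parent_blob_gas_used - TARGET_BLOB_GAS_PER_BLOCK


def blob_cost_wei(num_blobs: int, excess_blob_gas: int) -> int:
    """Blob fee only; the carrying transaction also pays normal execution gas."""
    return num_blobs * GAS_PER_BLOB * blob_base_fee(excess_blob_gas)


def calldata_cost_wei(num_bytes: int, base_fee_wei: int) -> int:
    """Upper bound for posting the same bytes as calldata (all bytes non-zero)."""
    return num_bytes * 16 * base_fee_wei


HYPOTHETICAL_BASE_FEE = 30 * 10**9  # 30 gwei, an assumed execution base fee

print("one blob, no excess demand:", blob_cost_wei(1, 0), "wei")
print("same bytes as calldata:    ", calldata_cost_wei(BYTES_PER_BLOB, HYPOTHETICAL_BASE_FEE), "wei")

# Assumed scenario: 50 consecutive blocks filled to the blob limit.
excess = 0
for _ in range(50):
    excess = next_excess_blob_gas(excess, MAX_BLOB_GAS_PER_BLOCK)
print("blob base fee after 50 full blocks:", blob_base_fee(excess), "wei per blob gas")
```

The point of the sketch is that the blob price responds only to blob demand, not to general demand for execution gas. When blobs are underused the price sits at its floor, and it climbs exponentially only when blocks persistently carry more than the target number of blobs.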

For more of a deep-dive, I really enjoyed this post by Kaartikay Kapoor.

Economic consequences

If the L1 costs of batch submission go down and sequencers keep their current percentage margins, their absolute profits will decline along with those costs.
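The arithmetic behind that claim is simple enough to spell out. The sketch below assumes a sequencer that charges users its L1 cost plus a fixed percentage markup. The cost figure, the 20% markup, and the function name are hypothetical, and the 100x factor is the reduction mentioned earlier.

```python
def sequencer_profit(l1_cost: int, margin_bps: int) -> int:
    """Profit when user fees equal L1 cost plus a markup given in basis points."""
    return l1_cost * margin_bps // 10_000


cost_before = 1_000_000          # hypothetical monthly L1 cost, arbitrary units
margin_bps = 2_000               # hypothetical 20% markup
cost_after = cost_before // 100  # the 100x cost reduction

profit_before = sequencer_profit(cost_before, margin_bps)
profit_after = sequencer_profit(cost_after, margin_bps)

# How much transaction volume would have to grow to restore the old profit.
volume_growth_needed = profit_before // profit_after

print(f"profit before: {profit_before:,}")
print(f"profit after:  {profit_after:,}")
print(f"volume growth needed at the same margin: {volume_growth_needed}x")
```

Under these assumptions, profit shrinks by the same factor as cost, so a sequencer would need roughly a hundredfold increase in volume, or a much higher markup, to stand still.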

Since EIP-4844 will be available to all rollups and be effective at both large and small scales of transaction volumes, market pressure will drive margins to an equilibrium where sequencer profits will initially be significantly depressed.

Do they bounce back?

There are several philosophies to get out of this dilemma in the medium/long-term.

1) Extract MEV

A similar debate occurred between Arbitrum community members last April.

For sequencers that order transactions based on a FIFO ordering or priority fees in a private mempool, money is left on the table.

While the two are hard to decouple completely, you could argue that MEV extraction is fueled more by a chain's economic volatility and ordering-sensitive activity than by gas costs, and could therefore be pursued as a resilient source of revenue.

Unfortunately that idea is not very popular due to the regulatory & trust challenges associated with extracting MEV from users in a private mempool.

2) A return to protocol fees

Since charging protocol fees is becoming more popular, the drop in blockspace costs may directly favor protocols, especially ones that benefit from increased transaction volume in their fee models (such as Zora).

This could slightly shift market power away from general purpose rollups that operate as developer platforms towards appchains.

3) Focus on the token

Messari suggested in a paywalled article that a return to L2 tokenomics as a primary driver could provide a more sustainable source of revenues.

Optimism seems on a positive trajectory there, having already tied sequencer revenues in a flywheel with OP disbursement.

However, a story where the token is fueled by the cash flows associated with a growing sequencer revenue stream was much cleaner and didn't require as much reliance on a reflexive or governance-based view on token prices.

I'm obviously not a fan of closed systems where the token is the primary value driver.

4) Rollups as current accounts

Ironically, perhaps the most interesting solution comes from Blast, which has been labeled a speculative L2.

Sure, the idea that users just get native yield from deposited tokens makes it seem speculative.

But the idea of nontrivial wrapping on the L1 to L2 deposit layer has more potential.

Rollups could aggregate user voting power or even take a share of the yield themselves.

The chicken & egg use case dilemma

A final lens, and perhaps the most speculative, is to examine the substitution effect overall.

Yes, in the short-term we could go through a sequencer revenue winter with more rollups becoming insolvent (like PGN) and/or consolidating, with only the “Amazons” of the rollup world surviving by hitting enough scale.

While we focus a lot on L2 competition, one could see blockspace as competing with offchain compute and/or payment activity that is not crypto-intermediated.

From that point of view, lower sequencer fees should make new types of smart contracts viable and increase the overall volume of transactions.

Order-of-magnitude reductions tend to lead to qualitatively different possibilities, and this could be true of use case enablement as well.

So while we often complain that blockspace is already under-utilized and we need more use cases, perhaps we could recognize that causality goes in the other direction too.

Even cheaper L2 blockspace could by itself enable new use cases which could then necessitate more reductions in L2 blockspace costs.

Perhaps what we needed was better infrastructure all along.

And EIP-4844 could be it.